MemSAM: Innovating Echocardiography Video Segmentation with Temporal and Noise-resilient Techniques for Refined Cardiovascular Diagnostic Imaging

A Novel Approach to Overcoming Challenges in Ultrasound Imaging Using Advanced Memory Prompting

Study conducted by Prof. Harry QIN and his research team

Cardiovascular diseases are a leading health concern in Hong Kong, prompting many to undergo regular heart check-ups for their early detection and management. Echocardiography, a key diagnostic imaging tool, plays a crucial role in assessing heart function, offering non-invasive insights into cardiovascular health and aiding in timely intervention. However, interpreting these ultrasound images manually is challenging due to speckle noise and ambiguous boundaries, requiring significant expertise and time. Consequently, a heart check-up is rarely included in a regular annual body check scheme.

As reported in a study published at the Conference on Computer Vision and Pattern Recognition (CVPR) [1], Prof. Harry QIN of the School of Nursing at The Hong Kong Polytechnic University and his team have developed a novel model, MemSAM, which aims to revolutionise echocardiography video segmentation by adapting the Segment Anything Model (SAM), an artificial intelligence (AI) model from Meta AI, to the specific demands of medical imaging.

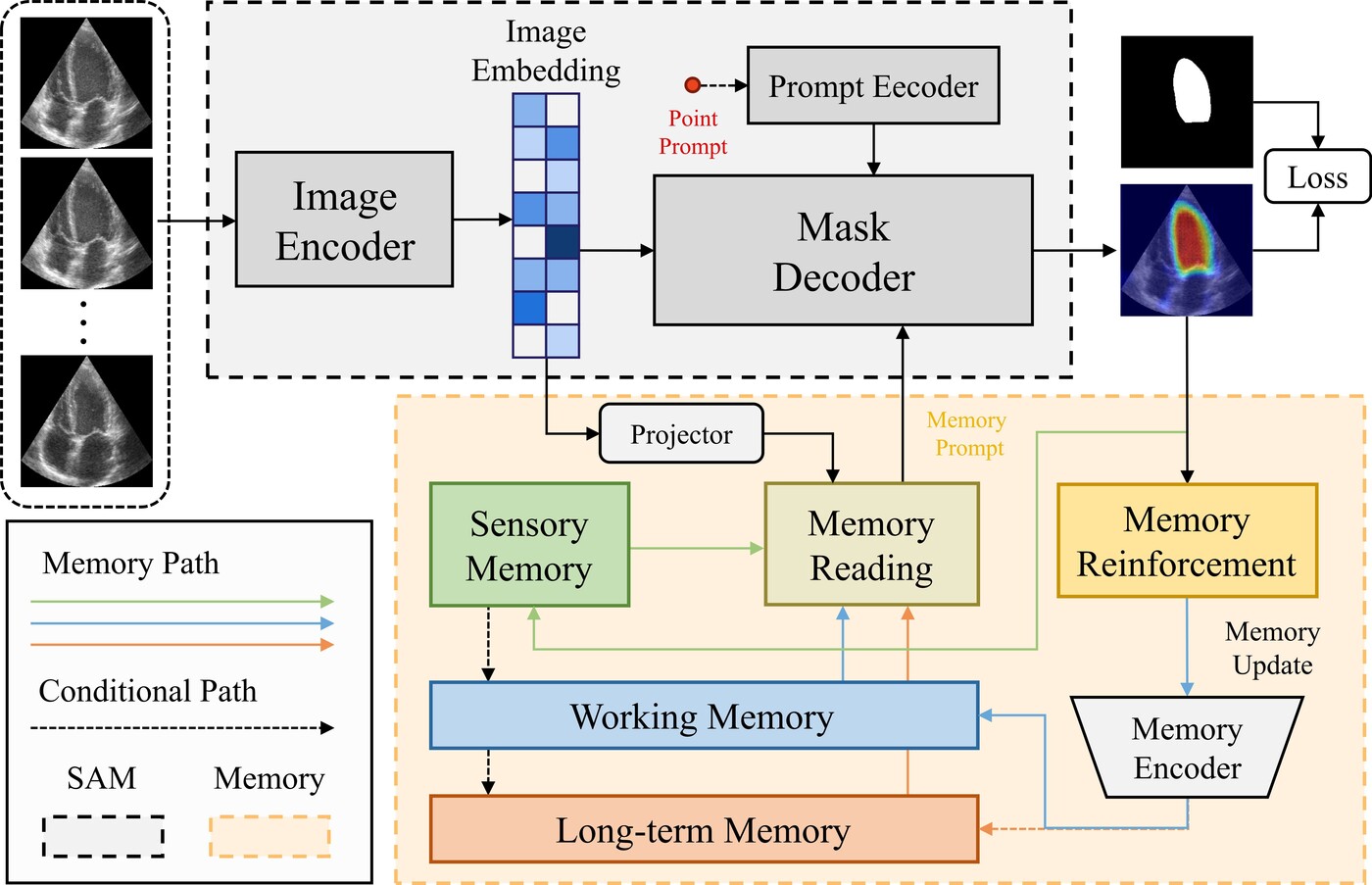

MemSAM introduces a unique approach to echocardiography video segmentation through a temporal-aware and noise-resilient prompting scheme. SAM, released by Meta, is an advanced AI model for image segmentation that can quickly identify and segment the elements in any image. While SAM excels at natural image segmentation, its direct application to medical videos has been limited by the lack of temporal consistency and the presence of significant noise. MemSAM addresses these issues by incorporating a space-time memory mechanism that captures both spatial and temporal information, ensuring consistent and accurate segmentation across video frames. MemSAM has the potential to substantially reduce the cost and specialised expertise that interpreting echocardiograms requires, which could in turn ease the burden of prolonged wait times for advanced cardiac imaging. Furthermore, it could enable simplified cardiac assessments to be incorporated into routine health screenings, enhancing accessibility and early detection.

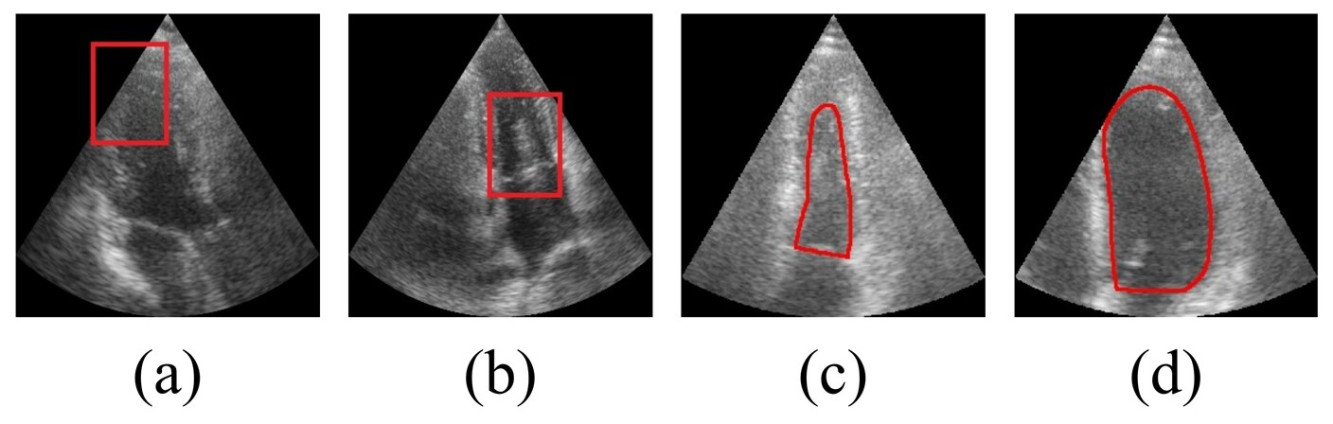

Echocardiography videos are notoriously difficult to segment due to several inherent challenges. The presence of massive speckle noise and numerous artifacts, coupled with the ambiguous boundaries of cardiac structures, complicates the segmentation process. Moreover, the dynamic nature of heart movements results in large variations of target objects across frames (Figure 1). MemSAM's memory reinforcement mechanism enhances the quality of memory prompts by leveraging predicted masks, effectively mitigating the adverse effects of noise and improving segmentation accuracy (Figure 2).

Figure 1. The challenges of echocardiography include:

a) ambiguous boundaries

b) speckle noise and artifacts

c-d) large variations of target objects across frames (captured from the same video)

Figure 2. Overview of MemSAM’s memory reinforcement mechanism

A standout feature of MemSAM is its ability to achieve state-of-the-art performance with limited annotation. In clinical practice, the labour-intensive nature of annotating echocardiographic videos often results in sparse labelling, typically restricted to key frames such as end-systole and end-diastole. MemSAM excels in a semi-supervised setting, demonstrating comparable performance to fully supervised models while requiring significantly fewer annotations and prompts.
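To illustrate what sparse labelling means for training, here is a minimal sketch in which the loss is computed only on the few frames that carry an annotation (for example end-diastole and end-systole). The Dice-style overlap score, the function names and the data layout are assumptions made for illustration; the article does not state which loss MemSAM uses.

```python
def dice(pred, target, eps=1e-6):
    """Overlap score between two flat binary masks (1.0 means identical)."""
    intersection = sum(p * t for p, t in zip(pred, target))
    return (2 * intersection + eps) / (sum(pred) + sum(target) + eps)


def sparse_supervised_loss(predictions, annotations):
    """Average (1 - Dice) over annotated frames only.

    predictions: list of per-frame masks (flat lists of 0/1), one per frame.
    annotations: dict mapping a frame index to its ground-truth mask.
    Frames without an annotation contribute nothing to the loss.
    """
    losses = [1.0 - dice(predictions[i], mask) for i, mask in annotations.items()]
    return sum(losses) / len(losses)


# Hypothetical three-frame clip in which only frames 0 and 2 are labelled.
predictions = [[1, 1, 0], [0, 1, 0], [0, 1, 1]]
annotations = {0: [1, 1, 0], 2: [0, 1, 0]}
print(sparse_supervised_loss(predictions, annotations))
```

In this toy setup the unlabelled middle frame is still segmented, but it is never compared against a ground truth.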

MemSAM's efficacy has been rigorously tested on two public datasets, CAMUS and EchoNet-Dynamic, showcasing its superior performance over existing models. The model's ability to maintain high segmentation accuracy with minimal prompts is particularly noteworthy, highlighting its potential to streamline clinical workflows and reduce the burden on healthcare professionals.

The technology behind MemSAM is rooted in the integration of SAM with advanced memory prompting techniques. SAM, known for its powerful representation capabilities, has been adapted to handle the unique challenges posed by medical videos. The core innovation lies in the temporal-aware prompting scheme, which utilises a space-time memory to guide the segmentation process. This memory contains both spatial and temporal cues, allowing the model to maintain consistency across frames and avoid the pitfalls of misidentification caused by mask propagation.
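The idea of reading from a space-time memory can be sketched as an attention lookup: a feature from the current frame is compared with features stored from earlier frames, and the stored values are averaged according to their similarity. This is a generic illustration under stated assumptions, not MemSAM's actual implementation; the function names, the scaled dot-product similarity and the toy two-dimensional features are all hypothetical.

```python
import math


def softmax(scores):
    """Turn similarity scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def read_memory(query, memory_keys, memory_values):
    """Return a similarity-weighted average of the stored memory values.

    query:         feature vector for one location in the current frame.
    memory_keys:   feature vectors stored from earlier frames.
    memory_values: the information stored alongside each key.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in memory_keys]
    weights = softmax(scores)
    dim = len(memory_values[0])
    return [sum(w * value[i] for w, value in zip(weights, memory_values))
            for i in range(dim)]


# Hypothetical memory with two entries taken from earlier frames.
keys = [[10.0, 0.0], [0.0, 10.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
print(read_memory([10.0, 0.0], keys, values))
```

Because the query closely matches the first key, the readout is dominated by the first stored value, which is how cues from earlier frames can guide the segmentation of the current one.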

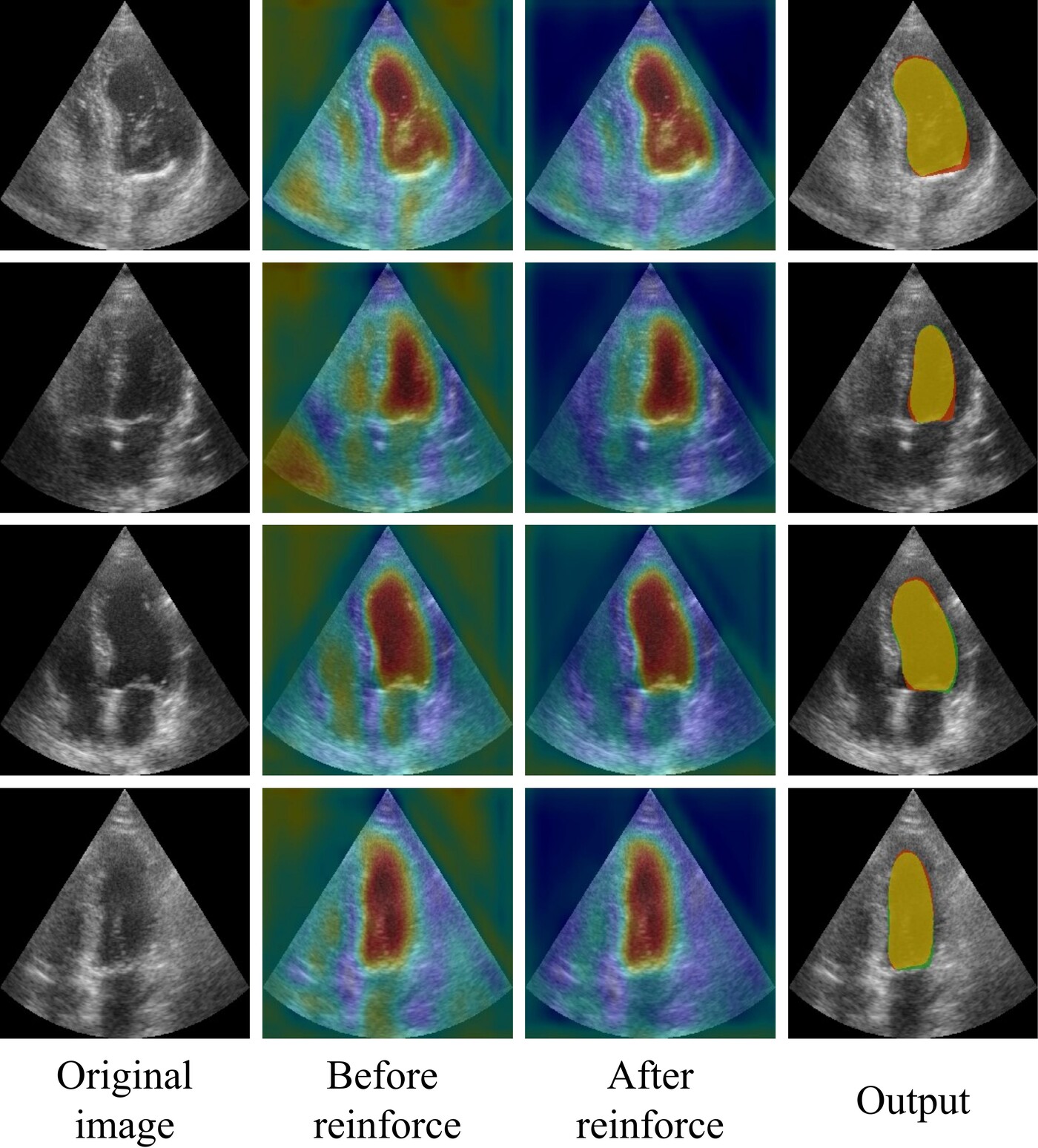

The memory reinforcement mechanism is another critical component of MemSAM. Ultrasound images are often plagued by complex noise, which can degrade the quality of image embeddings. To counter this, MemSAM employs a reinforcement strategy that uses segmentation results to emphasise foreground features and reduce the impact of background noise (Figure 3). This approach not only enhances the discriminability of feature representations but also prevents the accumulation and propagation of errors in the memory.

Figure 3. Feature representations before and after reinforcement. The model emphasises the ventricular area.
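The reinforcement idea described above can be sketched as follows: before features are written to memory, each location is scaled by the predicted probability that it belongs to the foreground, so that background noise carries less weight. The weighting formula, the `background_weight` parameter and the function name are assumptions chosen for illustration rather than the formulation used in the paper.

```python
def reinforce(features, mask, background_weight=0.2):
    """Emphasise foreground features using a predicted mask.

    features: one feature vector per image location.
    mask:     predicted foreground probability (0.0 to 1.0) per location.
    Locations with probability 1.0 are kept unchanged; locations with
    probability 0.0 are scaled down to `background_weight`.
    """
    reinforced = []
    for feature, probability in zip(features, mask):
        weight = background_weight + (1.0 - background_weight) * probability
        reinforced.append([weight * x for x in feature])
    return reinforced


# Hypothetical example: the first location is foreground, the second is background.
features = [[1.0, 2.0], [3.0, 4.0]]
mask = [1.0, 0.0]
print(reinforce(features, mask))
```

Keeping a small non-zero background weight, rather than zeroing the background entirely, is a design choice in this sketch so that an imperfect mask does not erase information outright.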

MemSAM's architecture is built on SAMUS [2], a medical foundation model that is in turn based on SAM and optimised for medical images. The model processes videos sequentially, frame by frame, relying on memory prompts rather than external prompts for subsequent frames. This design minimises the need for dense annotations and external prompts, making it particularly suited for semi-supervised tasks.
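The frame-by-frame flow can be sketched as a simple loop: only the first frame receives an external prompt, and every later frame is prompted from the memory built so far. The `segment` and `build_memory_prompt` callables below are hypothetical stand-ins (a toy intensity threshold), not the SAM-based components themselves.

```python
def segment_video(frames, first_prompt, segment, build_memory_prompt):
    """Segment frames in order, prompting from memory after the first frame."""
    memory = []  # (frame, predicted mask) pairs from frames already processed
    masks = []
    for index, frame in enumerate(frames):
        prompt = first_prompt if index == 0 else build_memory_prompt(memory)
        mask = segment(frame, prompt)
        memory.append((frame, mask))
        masks.append(mask)
    return masks


def toy_segment(frame, prompt):
    """Toy stand-in: mark pixels whose intensity is close to the prompt value."""
    return [1 if abs(pixel - prompt) < 0.3 else 0 for pixel in frame]


def toy_memory_prompt(memory):
    """Toy stand-in: mean foreground intensity of the most recent frame."""
    frame, mask = memory[-1]
    foreground = [pixel for pixel, m in zip(frame, mask) if m == 1]
    return sum(foreground) / len(foreground) if foreground else 0.0


# Hypothetical two-frame clip with three pixels per frame.
frames = [[0.1, 0.9, 0.8], [0.2, 0.7, 0.9]]
print(segment_video(frames, 0.9, toy_segment, toy_memory_prompt))
```

The external prompt (0.9) is used once; the second frame is segmented using only what was remembered from the first.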

While MemSAM represents a significant leap forward in echocardiography video segmentation, future research aims to enhance the model's robustness, particularly in scenarios where initial frame quality is poor. Additionally, exploring the application of MemSAM across other medical imaging domains and optimising its computational efficiency are exciting avenues for further development.

MemSAM not only addresses the longstanding challenges of ultrasound video segmentation but also sets a new benchmark for integrating advanced machine learning techniques into medical imaging. By bridging the gap between cutting-edge technology and clinical application, MemSAM holds the promise of improving diagnostic accuracy and patient outcomes in cardiovascular care. This innovative model exemplifies the potential of AI to revolutionise healthcare, offering a glimpse into a future where automated, accurate and efficient diagnostic tools are the norm. The source code for this study is openly available at http://github.com/dengx10520/MemSAM.

Prof. Qin’s CVPR paper was shortlisted as one of 24 best paper nominees among 2,719 accepted papers. In 2024, Stanford University ranked Prof. Qin among the top 2% most-cited scientists worldwide (career-long) in the field of artificial intelligence and image processing, and among the top 2% most-cited scientists worldwide (single-year) for five consecutive years, from 2020 to 2024.

References

[1] X. Deng, H. Wu, R. Zeng, and J. Qin. MemSAM: Taming Segment Anything Model for Echocardiography Video Segmentation. CVPR 2024, June 2024.

[2] X. Lin, Y. Xiang, L. Zhang, X. Yang, Z. Yan, and L. Yu. SAMUS: Adapting Segment Anything Model for Clinically-Friendly and Generalizable Ultrasound Image Segmentation. arXiv preprint arXiv:2309.06824, 2023.
