Deep Learning Translates Colour Fundus Photography into Realistic Indocyanine Green Angiography for AMD Screening


A Novel Approach to Non-Invasive Choroidal Imaging Using GANs

Study conducted by Prof. Mingguang HE and his research team

Age-related macular degeneration (AMD) is a leading cause of vision impairment among the elderly, making effective screening tools essential for managing its progression. Late-stage AMD is categorised into atrophic (dry) AMD and neovascular (wet) AMD, and dry AMD may progress to wet AMD. The two forms can be distinguished by the presence of choroidal neovascularisation (CNV), the hallmark of wet AMD.

Traditionally, Indocyanine Green Angiography (ICGA) has been the gold standard for detecting chorioretinal diseases, offering unparalleled insights into the choroidal vasculature. Its ability to dynamically visualise deeper choroidal structures and lesions behind the retinal pigment epithelium makes it invaluable for distinguishing wet AMD from other chorioretinal conditions. However, ICGA requires injecting the water-soluble cyanine dye indocyanine green (ICG) into the patient as a contrast agent. This invasiveness, together with potential adverse reactions such as nausea, vomiting and hypotensive shock, limits its routine clinical use. The complex procedures involved further hinder its widespread adoption, creating a need for alternative methods that offer similar diagnostic capabilities without the associated risks.

Colour Fundus (CF) photography, by contrast, is non-invasive and widely used, but it provides limited information because it cannot visualise deeper choroidal structures. CF images are often of variable quality and cannot distinguish features shared by several chorioretinal diseases, which can lead to ambiguous diagnoses when CF is used alone. This limitation underscores the need for innovative solutions that enhance the diagnostic power of CF photography and make it a more reliable tool for AMD screening.

In a study published in npj Digital Medicine [1], Prof. Mingguang HE, Director of the Research Centre for SHARP Vision and Chair Professor of Experimental Ophthalmology at The Hong Kong Polytechnic University, and his research team introduced a groundbreaking solution that uses deep learning to translate CF images into realistic ICGA images. This innovation addresses the limitations of both ICGA and CF, offering a non-invasive, comprehensive, portable and easy-to-use tool for wet AMD screening. Ultimately, the team hopes the approach can be applied widely to shorten waiting times for retinal examinations, ensure that appropriate treatment is given in a timely manner, and relieve pressure on the primary eye care sector of the public healthcare services.

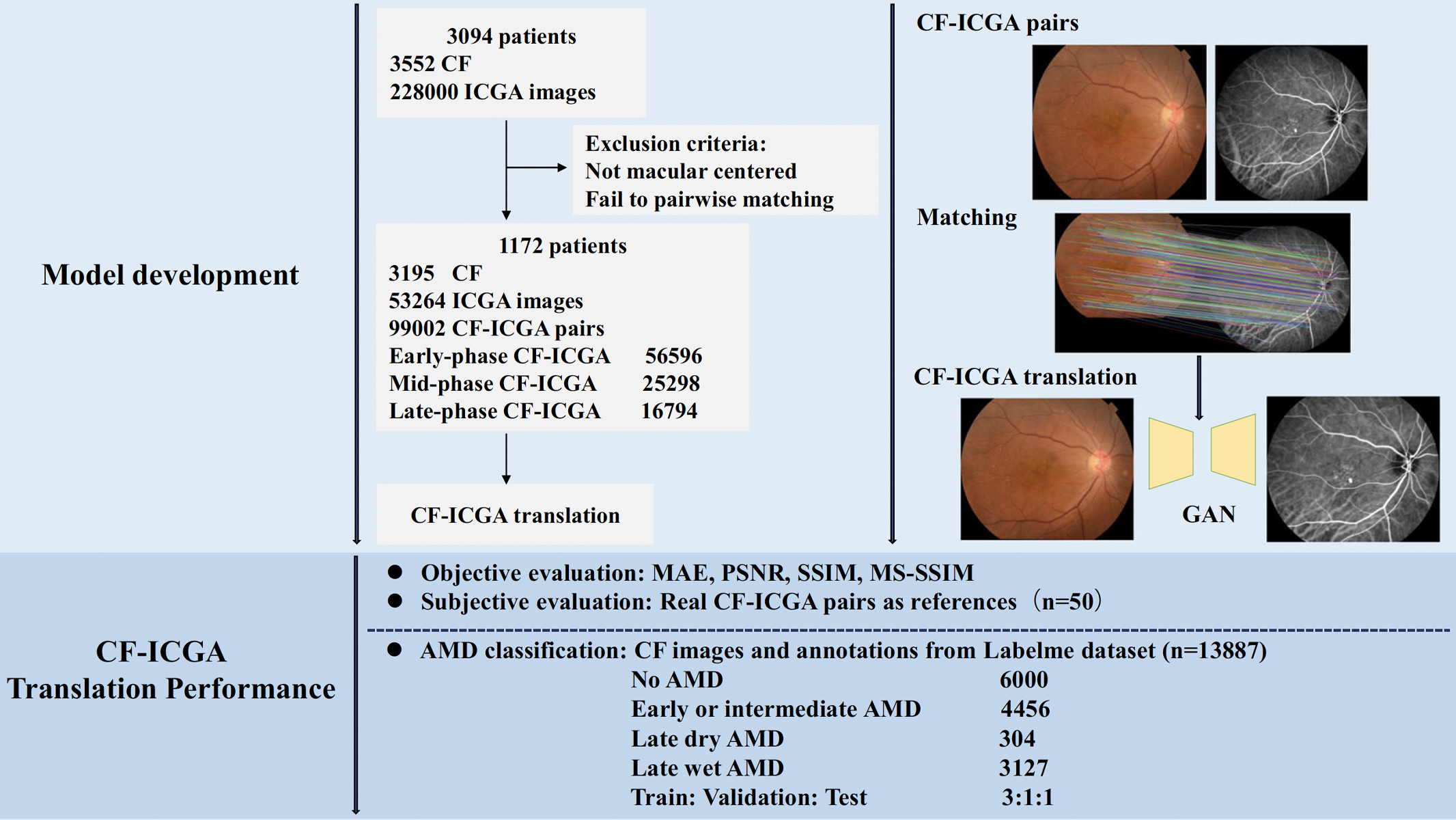

At the core of the methodology is a deep-learning architecture known as a Generative Adversarial Network (GAN), which comprises two neural networks trained in tandem: a generator that produces synthetic images and a discriminator that learns to tell them apart from real ones, pushing the generator towards increasingly realistic output. To develop the model, the team collected 3,195 CF images and 53,264 ICGA images from 1,172 patients. Each ICGA image was then matched with its corresponding CF images, yielding a substantial dataset of 99,002 CF-ICGA pairs, of which 56,596 pairs are in the early phase, 25,298 in the mid phase and 16,794 in the late phase of ICGA. Translated ICGA images were then generated from the CF images and evaluated. The flow chart of the study is shown in Figure 1.

Figure 1. Flow chart of the study
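For readers unfamiliar with GANs, the adversarial training between generator and discriminator is usually written as the textbook conditional GAN objective below, with x a CF image, y the matching real ICGA image and G(x) the generated ICGA image. The discriminator D tries to maximise the objective (scoring real pairs high and generated pairs low) while the generator G tries to minimise it. This is shown for illustration only: Pix2PixHD extends it with multi-scale discriminators and additional loss terms, implementations often substitute a least-squares variant, and the study's exact training objective is not reproduced here.

```latex
\min_G \max_D \; \mathcal{L}_{\mathrm{cGAN}}(G, D)
  = \mathbb{E}_{(x, y)}\left[\log D(x, y)\right]
  + \mathbb{E}_{x}\left[\log\left(1 - D\big(x, G(x)\big)\right)\right]
```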

Generating realistic images posed significant challenges: the retinal pigment epithelium masks the underlying choroid in CF images, and the choroid itself has an intricate anatomical structure. The team therefore used Pix2PixHD, a variant of conditional GANs that excels at image-to-image translation by minimising an adversarial loss together with a pixel-reconstruction error. Its ability to handle high-resolution images and capture fine details makes it well suited to the task. To further enhance image quality, the team incorporated a Gradient Variance Loss, which sharpens edges and preserves high-frequency details so that the generated ICGA images are both accurate and detailed.
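The Gradient Variance Loss can be sketched as follows, assuming the formulation commonly used for it: compute Sobel gradient maps of the generated and real images, split each map into non-overlapping patches, take the variance within each patch, and penalise the mean squared difference between the two sets of variances. Blurry outputs have low-variance gradients, so the term pushes the generator towards sharper edges. This NumPy version works on single-channel 2-D arrays; the 8×8 patch size and all function names are illustrative assumptions rather than details taken from the study, and a training pipeline would implement the same computation in a differentiable deep-learning framework.

```python
import numpy as np


def sobel_gradients(img):
    """Return (gx, gy) Sobel responses over the valid region of a 2-D image."""
    p = np.asarray(img, dtype=np.float64)
    # Horizontal gradient: 1-2-1 weighted right column minus left column.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (
        p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2]
    )
    # Vertical gradient: 1-2-1 weighted bottom row minus top row.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (
        p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:]
    )
    return gx, gy


def patch_variance(grad, patch=8):
    """Variance of each non-overlapping patch x patch block (ragged edges trimmed)."""
    h = grad.shape[0] - grad.shape[0] % patch
    w = grad.shape[1] - grad.shape[1] % patch
    if h == 0 or w == 0:
        raise ValueError("gradient map is smaller than one patch")
    blocks = grad[:h, :w].reshape(h // patch, patch, w // patch, patch)
    return blocks.var(axis=(1, 3))


def gradient_variance_loss(generated, real, patch=8):
    """Mean squared difference between per-patch gradient variances, x plus y."""
    gx_g, gy_g = sobel_gradients(generated)
    gx_r, gy_r = sobel_gradients(real)
    loss_x = np.mean((patch_variance(gx_g, patch) - patch_variance(gx_r, patch)) ** 2)
    loss_y = np.mean((patch_variance(gy_g, patch) - patch_variance(gy_r, patch)) ** 2)
    return float(loss_x + loss_y)


# Toy check on random data: a blurred copy loses high-frequency detail.
rng = np.random.default_rng(0)
real = rng.random((66, 66))
blurred = (real + np.roll(real, 1, axis=0) + np.roll(real, 1, axis=1)) / 3
print(gradient_variance_loss(real, real))     # identical images give zero loss
print(gradient_variance_loss(blurred, real))  # the blurred copy is penalised
```

During training, a term like this would be added to the generator's loss with a weighting factor; the weighting used by the team is not stated here.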

The generated ICGA images underwent rigorous objective and subjective evaluation. Image fidelity was quantified with three objective metrics: Mean Absolute Error (MAE), Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM). The results were promising, with SSIM values ranging from 0.57 to 0.65 (on a scale where 1 indicates identical images), indicating substantial structural similarity to real ICGA images. These metrics reflect the model's ability to generate images that closely resemble the anatomical and pathological features seen in authentic ICGA images.
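The three fidelity metrics can be computed as in the sketch below for a pair of single-channel 8-bit images. MAE and PSNR follow their standard definitions; the SSIM function is a simplified version that averages the SSIM index over uniform 7×7 windows with the usual constants K1 = 0.01 and K2 = 0.03. The window, data range and function names are assumptions: the study's exact evaluation settings are not reported here, and library implementations (for example with Gaussian weighting) can give slightly different values.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def mae(a, b):
    """Mean absolute error between two images of equal shape."""
    return float(np.mean(np.abs(np.asarray(a, float) - np.asarray(b, float))))


def psnr(a, b, data_range=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = np.mean((np.asarray(a, float) - np.asarray(b, float)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10 * np.log10(data_range**2 / mse))


def ssim(a, b, data_range=255.0, win=7):
    """Mean SSIM over uniform win x win windows (simplified, no Gaussian weights)."""
    a = np.asarray(a, float)
    b = np.asarray(b, float)
    if min(a.shape) < win:
        raise ValueError("image is smaller than the SSIM window")
    c1 = (0.01 * data_range) ** 2  # stabilises the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilises the contrast/structure term
    wa = sliding_window_view(a, (win, win))
    wb = sliding_window_view(b, (win, win))
    mu_a = wa.mean(axis=(-2, -1))
    mu_b = wb.mean(axis=(-2, -1))
    var_a = wa.var(axis=(-2, -1))
    var_b = wb.var(axis=(-2, -1))
    cov = (wa * wb).mean(axis=(-2, -1)) - mu_a * mu_b
    ssim_map = ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a**2 + mu_b**2 + c1) * (var_a + var_b + c2)
    )
    return float(ssim_map.mean())


# Toy usage: a synthetic "real" image and a noisy stand-in for a generated one.
rng = np.random.default_rng(1)
real_img = rng.integers(0, 256, size=(64, 64)).astype(float)
generated_img = np.clip(real_img + rng.normal(0, 20, real_img.shape), 0, 255)
print(mae(real_img, generated_img), psnr(real_img, generated_img), ssim(real_img, generated_img))
```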

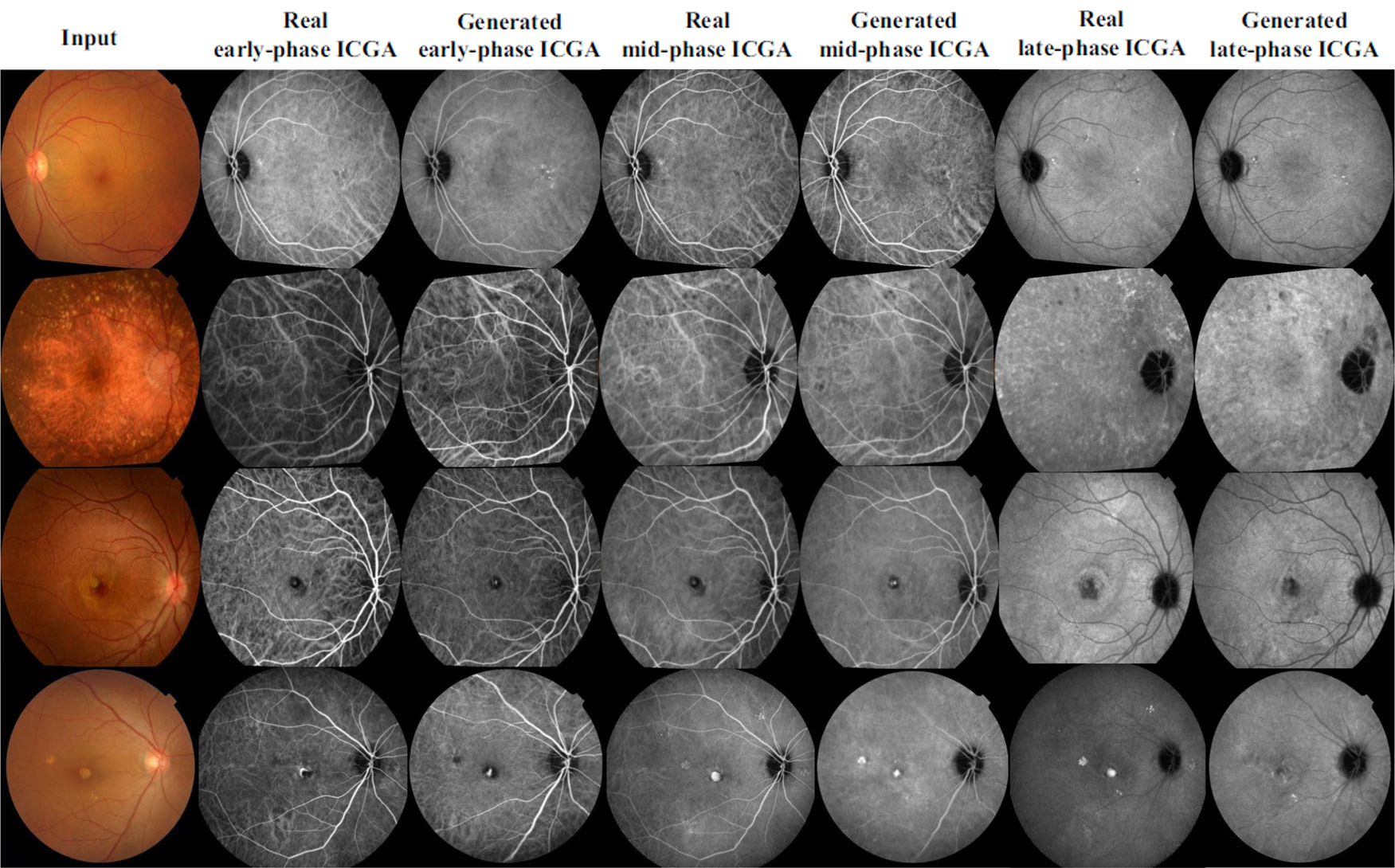

Subjective evaluation by two experienced ophthalmologists further supported the quality of the synthesised images. Using a five-point scale, they assessed the anatomical accuracy and pathological detail of the images; examples are shown in Figure 2. For the internal test set, the generated ICGA images were visually very close to the real ones, so a blinded evaluation was conducted: the generated images received scores close to those of real ICGA images, and Cohen's kappa indicated excellent inter-rater agreement. For the external test set, the differences in image characteristics between the training and external datasets were clearly noticeable, so blinding would not have been effective and a blinded evaluation was not conducted. Nevertheless, this subjective validation underscores the model's potential to produce clinically relevant images that can aid accurate AMD diagnosis.

Figure 2. Examples of real ICGA and translated ICGA images. The 1st row shows a case of early dry AMD, the 2nd row a case of intermediate dry AMD, and the 3rd and 4th rows cases of wet AMD. Rows 1-3 are from the internal test set, and row 4 is from the external test set.
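Cohen's kappa corrects the raw agreement between two raters for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of matching scores and p_e is the chance agreement implied by each rater's score distribution. The sketch below computes the unweighted form for two lists of five-point scores. Whether the study used this unweighted form or a weighted variant (which gives partial credit for near-misses on an ordinal scale) is not stated here, and the example scores are invented for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same score by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the two raters' marginal frequencies per score.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[s] * counts_b[s] for s in counts_a) / n**2
    if p_e == 1:  # both raters used one identical score for every item
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical five-point scores from two graders for six images.
grader_1 = [5, 4, 4, 3, 5, 2]
grader_2 = [5, 4, 3, 3, 5, 2]
print(round(cohens_kappa(grader_1, grader_2), 3))  # 0.778
```

Here the graders agree on five of six images (p_o ≈ 0.83) with p_e = 0.25, giving kappa = 7/9 ≈ 0.78.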

The implications of this research are profound. Combining generated ICGA images with CF images improved the accuracy of AMD classification, with the area under the receiver operating characteristic curve (AUC) increasing from 0.93 to 0.97. The addition of generated ICGA images also reduced error rates across various AMD categories. This gain in diagnostic accuracy highlights the potential of the approach as a valuable tool for population-based AMD screening and as a non-invasive alternative to traditional ICGA.
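The AUC can be read as the probability that a randomly chosen positive case receives a higher model score than a randomly chosen negative case, with ties counted as half. The sketch below computes it directly from that pairwise definition and extends it to several AMD categories by averaging one-vs-rest AUCs. This averaging scheme, the function names and the toy scores are assumptions for illustration; how the study aggregated AUC across categories and fused CF with generated ICGA inputs is not described here.

```python
def roc_auc(labels, scores):
    """Binary AUC as P(score of a positive > score of a negative), ties = 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(labels, prob_rows, classes):
    """Average of one-vs-rest AUCs; prob_rows[i][k] is the score for classes[k]."""
    aucs = []
    for k, cls in enumerate(classes):
        binary = [1 if lab == cls else 0 for lab in labels]
        aucs.append(roc_auc(binary, [row[k] for row in prob_rows]))
    return sum(aucs) / len(aucs)


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

The pairwise loop costs O(n_pos × n_neg) comparisons, which is fine for illustration; a rank-based computation scales better to large test sets.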

Prof. He's research represents a significant advance in ophthalmology, offering a novel, non-invasive method for choroidal imaging. By harnessing deep learning and GANs, the team has demonstrated the feasibility of generating realistic ICGA images from CF images. Prof. He has also applied deep learning to another of his inventions, a portable, self-testing retinal fundus camera for detecting retinal and optic nerve diseases such as diabetic retinopathy (diabetic eye disease) and glaucoma from CF images. An ongoing collaboration with the optical company OPTICAL 88 supports data collection and the provision of primary eye care to the public. Moving forward, further validation in real-world clinical settings will be crucial to fully realise the potential of this approach to improve patient outcomes.

The data used for model development in this study are not openly available for privacy reasons but are available from the corresponding author upon reasonable request. The AMD dataset used for external validation is held on a controlled-access data platform. Interested researchers can contact Prof. He for more information.

The research is supported by the Start-up Fund for Research Assistant Professors under the Strategic Hiring Scheme (Grant Number: P0048623) and the Hong Kong SAR Global STEM Professorship Scheme (Grant Number: P0046113).

Prof. He has been recognised by Stanford University as one of the top 2% most-cited scientists worldwide (career-long) in the field of ophthalmology and optometry for five consecutive years, from 2020 to 2024, and as one of the top 2% most-cited scientists worldwide (single-year) for six consecutive years, from 2019 to 2024. He founded and served as the first president of the Asia Pacific Tele-Ophthalmology Society, is a founding council member of the Asia Pacific Myopia Society, and is Deputy Secretary-General of the Asia Pacific Academy of Ophthalmology. He is also a STEM Scholar under the HKSAR Government's Global STEM Professorship Scheme and a Henry G. Leong Professor in Elderly Vision Health. Prof. He currently serves as the Director of the Research Centre for SHARP Vision, Director of the Jockey Club STEM Lab, and Chair Professor of Experimental Ophthalmology at The Hong Kong Polytechnic University.

References

[1] Chen, R., Zhang, W., Song, F., Yu, H., Cao, D., Zheng, Y., He, M. & Shi, D. (2024). Translating color fundus photography to indocyanine green angiography using deep-learning for age-related macular degeneration screening. npj Digital Medicine, 7, 34. https://doi.org/10.1038/s41746-024-01018-7

Prof. Mingguang HE