A Progressive Evolution in Virtual MRI Imaging for Tumour Detection


Exploring Innovative Deep Learning Models for Safer and More Accurate Diagnostics

Study conducted by Prof. Jing CAI and his team

Nasopharyngeal carcinoma (NPC) is a challenging malignancy because of its location in the nasopharynx, a complex region surrounded by critical structures such as the skull base and cranial nerves. The cancer is particularly prevalent in Southern China, where it occurs at a rate 20 times higher than in non-endemic regions of the world, and it therefore imposes a significant health burden. Because NPC is infiltrative, accurate imaging is crucial for effective treatment planning, particularly for radiation therapy, the primary treatment modality. Contrast-enhanced magnetic resonance imaging (MRI) using gadolinium-based contrast agents (GBCAs) has traditionally been the gold standard for delineating tumour boundaries. However, GBCAs carry risks, prompting the need for safer imaging alternatives.

Gadolinium is used as an MRI contrast agent because it enhances the visibility of internal structures. This is particularly useful in NPC, where the tumour's infiltrative nature requires precise imaging to distinguish it from surrounding healthy tissue. However, gadolinium also poses significant health risks. These include nephrogenic systemic fibrosis, a serious condition associated with gadolinium exposure that causes fibrosis of the skin, joints and internal organs, leading to severe pain and disability. Furthermore, recent studies have shown that gadolinium can accumulate in the brain, raising concerns about its long-term effects.

Prof. Jing CAI, Head and Professor of the Department of Health Technology and Informatics at The Hong Kong Polytechnic University, has been seeking ways to eliminate the use of GBCAs, in particular by investigating deep learning for virtual contrast enhancement (VCE) in MRI. In a paper published in the International Journal of Radiation Oncology, Biology, Physics in 2022, Prof. Cai and his research team reported the development of the Multimodality-Guided Synergistic Neural Network (MMgSN-Net) [1]. In 2024, the team further developed the Pixelwise Gradient Model with Generative Adversarial Network (GAN) for Virtual Contrast Enhancement (PGMGVCE), as reported in Cancers [2].

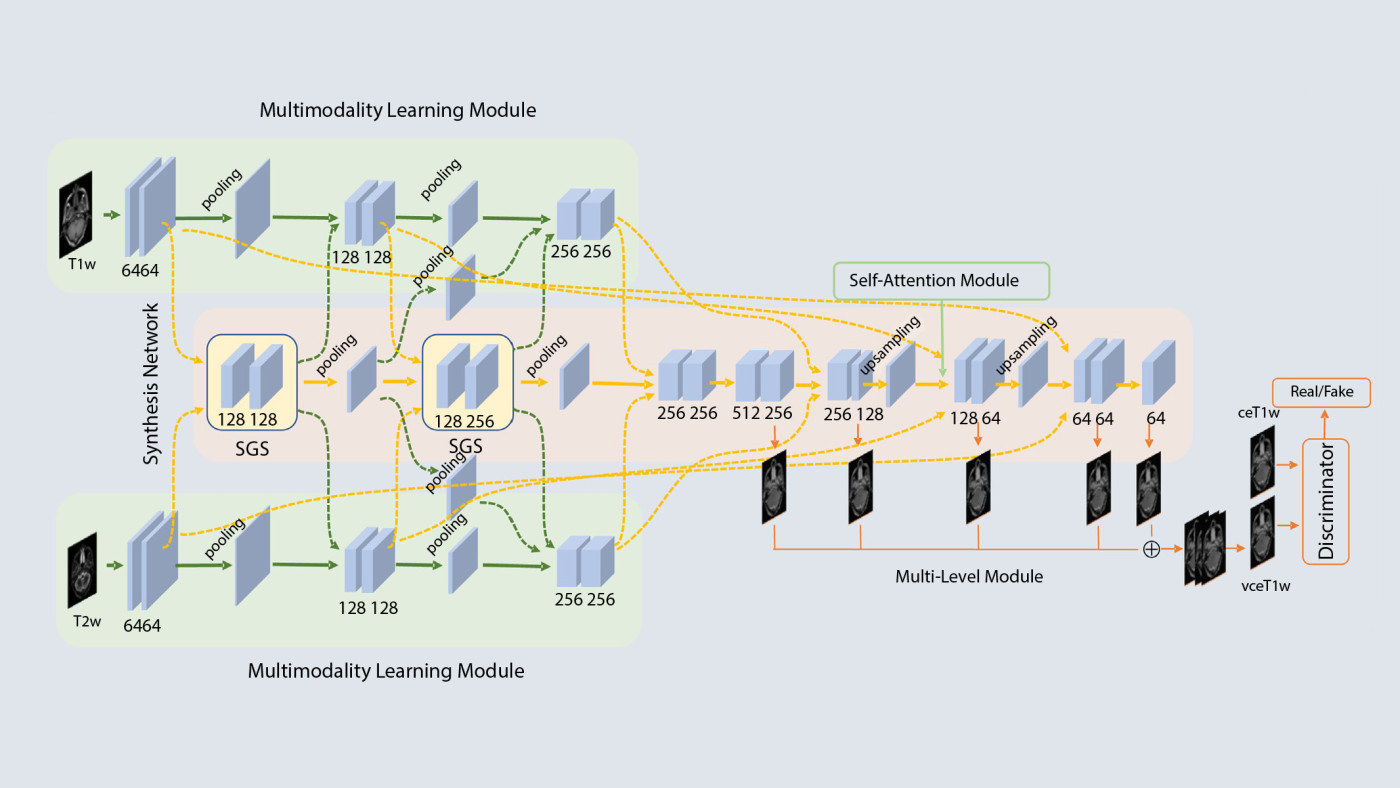

MMgSN-Net represented a significant leap forward in synthesising virtual contrast-enhanced T1-weighted MR images from contrast-free scans. It leverages complementary information from T1-weighted and T2-weighted images to produce high-quality synthetic images. Its architecture comprises a multimodality learning module, a synergistic guidance system, a self-attention module, a multi-level module and a discriminator, all working in concert to optimise feature extraction and image synthesis (Figure 1). The network is designed to disentangle tumour-related imaging features from each input modality, overcoming the limitations of single-modality synthesis. The synergistic guidance system plays a crucial role in fusing information from the T1- and T2-weighted images, enhancing the network's ability to capture complementary features. The self-attention module, in turn, helps preserve the shape of large anatomical structures, which is particularly important for accurately delineating the complex anatomy around NPC.

Figure 1. MMgSN-Net framework
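The paragraph above names the modules but not their internals. As a rough illustration only, the sketch below assumes that T1 and T2 features are fused by simple channel concatenation at each spatial position and then passed through generic scaled dot-product self-attention with identity projections (real networks learn query, key and value projections). The helpers `fuse_modalities` and `self_attention` are hypothetical names, not code from the MMgSN-Net repository, and the synergistic guidance system is not modelled.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_modalities(t1_feats, t2_feats):
    """Hypothetical fusion step: concatenate the T1 and T2 feature vectors at each position."""
    return [a + b for a, b in zip(t1_feats, t2_feats)]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity query/key/value projections.

    Every output vector is a softmax-weighted average of all input vectors,
    so each position can draw on features from anywhere in the image.
    """
    d = len(tokens[0])
    outputs = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in tokens]
        weights = softmax(scores)
        outputs.append([sum(w * tok[c] for w, tok in zip(weights, tokens)) for c in range(d)])
    return outputs


# Three spatial positions with 2 T1 channels and 1 T2 channel each (toy values).
t1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
t2 = [[0.5], [0.2], [0.9]]
fused = fuse_modalities(t1, t2)  # each position now has 3 channels
attended = self_attention(fused)
print(attended)
```

Because each output is a convex combination of all positions, attention lets distant regions inform every location, which is one plausible reason such a module helps preserve the shape of large structures.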

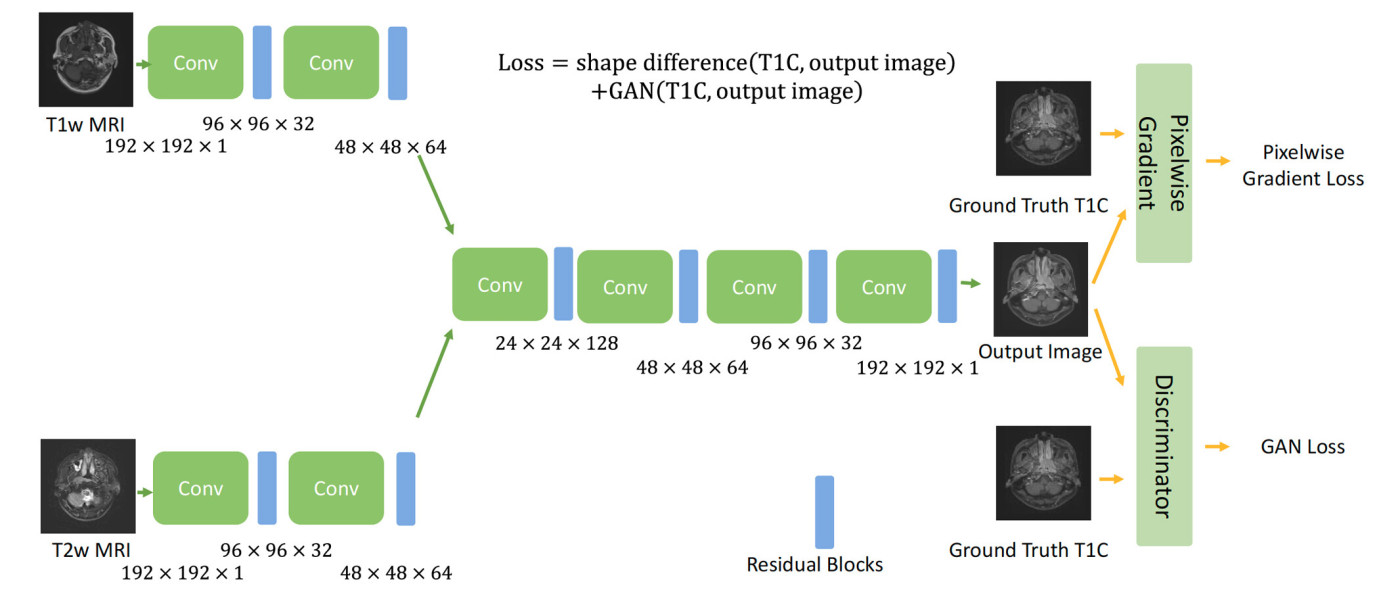

Building on the foundation laid by MMgSN-Net, the PGMGVCE model introduces a new approach to VCE in MRI. It combines a pixelwise gradient method with a GAN, a deep-learning architecture, to enhance the texture and detail of the synthetic images (Figure 2). A GAN comprises two parts: a generator that creates synthetic images and a discriminator that tries to distinguish them from real ones. The two are trained adversarially, with the generator improving its outputs based on feedback from the discriminator. In the proposed model, the pixelwise gradient method, originally used in image registration, captures the geometric structure of tissues, while the GAN component pushes the synthesised images to be visually indistinguishable from real contrast-enhanced scans. The PGMGVCE architecture is designed to integrate and prioritise features from T1- and T2-weighted images, leveraging their complementary strengths to produce high-fidelity VCE images.

Figure 2. PGMGVCE framework. The multiplication expressions in the figure give the dimensions and number of channels of each layer.
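To make the two loss ingredients concrete, here is a minimal sketch under stated assumptions. The pixelwise gradient term is written as a comparison of normalised gradient fields, an idea borrowed from image registration; the exact formulation in [2] may differ. The adversarial terms are the standard binary cross-entropy GAN losses (with the common non-saturating generator loss), taking discriminator outputs as probabilities. All function names are hypothetical.

```python
import math


def forward_gradients(img):
    """Forward-difference gradients (gx, gy) of a 2-D image stored as a list of rows.

    The last column (for gx) and the last row (for gy) are set to zero.
    """
    h, w = len(img), len(img[0])
    gx = [[img[i][j + 1] - img[i][j] if j + 1 < w else 0.0 for j in range(w)] for i in range(h)]
    gy = [[img[i + 1][j] - img[i][j] if i + 1 < h else 0.0 for j in range(w)] for i in range(h)]
    return gx, gy


def pixelwise_gradient_loss(pred, target, eps=1e-6):
    """Mean squared difference between normalised gradient fields (assumed form).

    Dividing each gradient by its magnitude keeps the edge direction and drops
    the edge strength, so the loss compares geometry rather than intensity.
    eps avoids division by zero in flat regions.
    """
    px, py = forward_gradients(pred)
    tx, ty = forward_gradients(target)
    h, w = len(pred), len(pred[0])
    total = 0.0
    for i in range(h):
        for j in range(w):
            p_norm = math.sqrt(px[i][j] ** 2 + py[i][j] ** 2 + eps ** 2)
            t_norm = math.sqrt(tx[i][j] ** 2 + ty[i][j] ** 2 + eps ** 2)
            total += (px[i][j] / p_norm - tx[i][j] / t_norm) ** 2
            total += (py[i][j] / p_norm - ty[i][j] / t_norm) ** 2
    return total / (h * w)


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy: the discriminator wants D(real) -> 1 and D(fake) -> 0."""
    return -(math.log(d_real + eps) + math.log(1.0 - d_fake + eps))


def generator_adversarial_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: the generator wants D(fake) -> 1."""
    return -math.log(d_fake + eps)


target = [[0.0, 1.0, 2.0] for _ in range(3)]
brighter = [[2.0 * v for v in row] for row in target]  # same edges, double contrast
flipped = [[-v for v in row] for row in target]        # edges point the other way
print(pixelwise_gradient_loss(brighter, target))  # close to 0
print(pixelwise_gradient_loss(flipped, target))   # about 2.67
```

Doubling the contrast leaves the gradient term near zero while reversing the edges makes it large, which illustrates why a gradient-based term targets shape rather than absolute intensity; the adversarial term is what pushes the texture towards realism.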

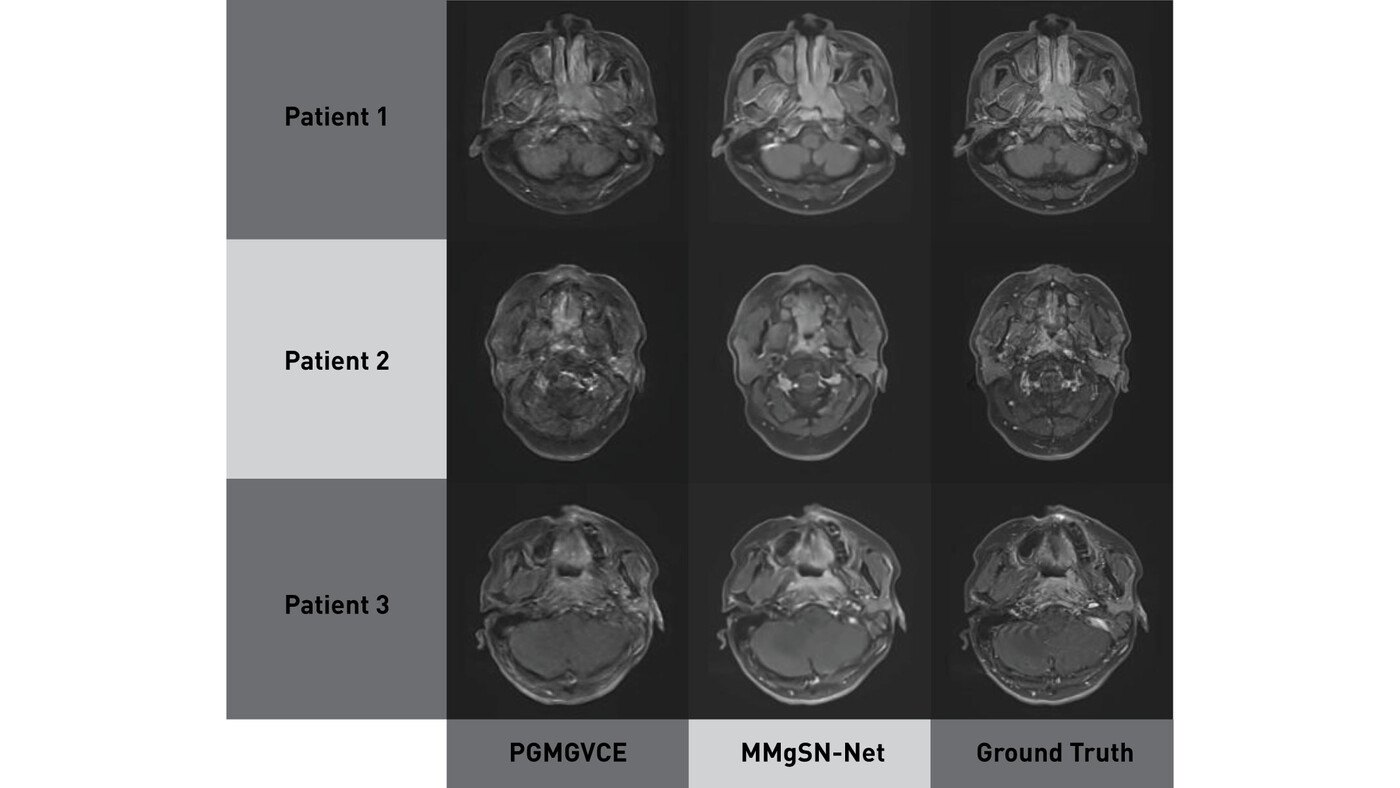

In comparative studies, PGMGVCE achieved accuracy similar to that of MMgSN-Net in terms of mean absolute error (MAE), mean squared error (MSE) and structural similarity index (SSIM). However, it excelled in texture representation: its output closely matched the texture of the ground-truth contrast-enhanced images, whereas the texture produced by MMgSN-Net appears smoother (Figure 3). This was evidenced by improved values of texture metrics such as total mean square variation per mean intensity (TMSVPMI) and Tenengrad function per mean intensity (TFPMI), which indicate more realistic texture replication. The ability of PGMGVCE to capture intricate details and textures suggests that it outperforms MMgSN-Net in certain respects, particularly in replicating the authentic texture of contrast-enhanced T1-weighted (T1C) images.

Figure 3. Results of PGMGVCE, MMgSN-Net and ground truth. Qualitatively, the MMgSN-Net output is smoother than the ground truth.
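The accuracy and texture metrics can be sketched as follows. MAE and MSE are standard; the article does not give formulas for TMSVPMI or TFPMI, so the two texture functions below use assumed definitions (a mean squared neighbour difference and a Sobel-based Tenengrad score, each divided by the mean intensity) and may not match [2] exactly. SSIM is omitted for brevity. Images are plain lists of rows with a positive mean intensity, and the function names are hypothetical.

```python
def mae(pred, target):
    """Mean absolute error over all pixels."""
    diffs = [abs(p - t) for rp, rt in zip(pred, target) for p, t in zip(rp, rt)]
    return sum(diffs) / len(diffs)


def mse(pred, target):
    """Mean squared error over all pixels."""
    diffs = [(p - t) ** 2 for rp, rt in zip(pred, target) for p, t in zip(rp, rt)]
    return sum(diffs) / len(diffs)


def mean_intensity(img):
    return sum(map(sum, img)) / (len(img) * len(img[0]))


def mean_square_variation_per_mean_intensity(img):
    """Assumed definition: mean squared difference between horizontally and
    vertically adjacent pixels, divided by the mean intensity."""
    h, w = len(img), len(img[0])
    diffs = [img[i][j + 1] - img[i][j] for i in range(h) for j in range(w - 1)]
    diffs += [img[i + 1][j] - img[i][j] for i in range(h - 1) for j in range(w)]
    return (sum(d * d for d in diffs) / len(diffs)) / mean_intensity(img)


def tenengrad_per_mean_intensity(img):
    """Assumed definition: mean of (Gx^2 + Gy^2) over interior pixels, using 3x3
    Sobel kernels and no threshold, divided by the mean intensity."""
    h, w = len(img), len(img[0])
    total, count = 0.0, 0
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            gx = (img[i - 1][j + 1] + 2 * img[i][j + 1] + img[i + 1][j + 1]
                  - img[i - 1][j - 1] - 2 * img[i][j - 1] - img[i + 1][j - 1])
            gy = (img[i + 1][j - 1] + 2 * img[i + 1][j] + img[i + 1][j + 1]
                  - img[i - 1][j - 1] - 2 * img[i - 1][j] - img[i - 1][j + 1])
            total += gx * gx + gy * gy
            count += 1
    return (total / count) / mean_intensity(img)


smooth = [[1.0, 1.0, 1.0] for _ in range(3)]                 # mean intensity 1.0
edged = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [3.0, 3.0, 3.0]]  # mean intensity 1.0
print(mean_square_variation_per_mean_intensity(smooth), mean_square_variation_per_mean_intensity(edged))
print(tenengrad_per_mean_intensity(smooth), tenengrad_per_mean_intensity(edged))
```

Both images have the same mean intensity, yet only the one with structure scores above zero on the texture measures. An over-smoothed synthesis would be expected to score below the ground truth on such measures even when its MAE and MSE are competitive.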

Fine-tuning the PGMGVCE model involved exploring various hyperparameter settings and normalisation methods. The study found that a 1:1 ratio of pixelwise gradient loss to GAN loss gave the best results, balancing the model's ability to capture both shape and texture. Different normalisation techniques, such as z-score, sigmoid and tanh, were also tested to improve the model's learning and generalisation. Sigmoid normalisation emerged as the most effective, slightly outperforming the other methods in terms of MAE and MSE.
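The article does not say exactly how the sigmoid and tanh normalisations were applied. The sketch below assumes they are applied to z-scored intensities, so that sigmoid maps values into (0, 1) and tanh into (-1, 1), and it reads the 1:1 ratio as equal weights in a weighted sum of the two loss terms. The function names and toy intensities are hypothetical.

```python
import math
import statistics


def zscore(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mu = statistics.fmean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        return [0.0 for _ in values]  # constant input: nothing to scale
    return [(v - mu) / sd for v in values]


def sigmoid_normalise(values):
    """Assumption: logistic sigmoid applied to z-scored values, output in (0, 1)."""
    return [1.0 / (1.0 + math.exp(-z)) for z in zscore(values)]


def tanh_normalise(values):
    """Assumption: tanh applied to z-scored values, output in (-1, 1)."""
    return [math.tanh(z) for z in zscore(values)]


def generator_total_loss(gradient_loss, gan_loss, w_gradient=1.0, w_gan=1.0):
    """Weighted sum of the two loss terms; the defaults give a 1:1 ratio."""
    return w_gradient * gradient_loss + w_gan * gan_loss


intensities = [120.0, 340.0, 560.0, 780.0]  # toy MR intensities
print(zscore(intensities))
print(sigmoid_normalise(intensities))
print(tanh_normalise(intensities))
print(generator_total_loss(0.25, 0.5))  # 0.75
```

Bounded normalisations such as sigmoid and tanh compress extreme intensities, which is one plausible reason they can steady training; the sketch does not reproduce the study's comparison.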

Another part of the study evaluated the PGMGVCE model when trained on a single modality, either T1-weighted or T2-weighted images. The results indicated that using both modalities together provided a more comprehensive representation of the anatomy, leading to better contrast enhancement than either modality alone. This finding highlights the importance of integrating multiple imaging modalities to capture the full spectrum of anatomical and pathological information.

The implications of these findings are significant for the future of MRI in NPC. By eliminating reliance on GBCAs, these models offer a safer alternative for patients, particularly those with contraindications to contrast agents. Moreover, the enhanced texture representation achieved by PGMGVCE could improve diagnostic accuracy, helping clinicians to better identify and characterise tumours.

Future research should focus on expanding these models' training datasets and incorporating additional MRI modalities to further enhance their diagnostic capabilities and generalisability across diverse clinical settings. As these technologies continue to evolve, they hold the potential to transform the medical imaging landscape, offering safer and more effective tools for cancer diagnosis and treatment planning.

The MMgSN-Net research was partly supported by Hong Kong SAR research grants GRF 151022/19M, ITS/080/19. The PGMGVCE research was partly supported by research grants from the Shenzhen Basic Research Program (JCYJ20210324130209023) of the Shenzhen Science and Technology Innovation Committee, Project of Strategic Importance Fund (P0035421) and RISA Projects (P0043001) from The Hong Kong Polytechnic University, Mainland–Hong Kong Joint Funding Scheme (MHP/005/20) and Health and Medical Research Fund (HMRF 09200576), the Health Bureau, The Government of the Hong Kong Special Administrative Region.

The code of MMgSN-Net is publicly available for noncommercial research purposes at https://github.com/WenLi-PolyU/MMgSN-Net-for-vce-MRI-synthesis.

Prof. Cai was ranked among the top 2% most-cited scientists worldwide (single-year) by Stanford University in the field of nuclear medicine & medical imaging for two consecutive years, 2023 and 2024. Currently, Prof. Cai serves as the Head of the Department of Health Technology and Informatics of The Hong Kong Polytechnic University.

References

[1] Li, W.; Xiao, H.; Li, T.; Ren, G.; Lam, S.; Teng, X.; Liu, C.; Zhang, J.; Lee, F.K.; Au, K.H.; et al. Virtual contrast-enhanced magnetic resonance images synthesis for patients with nasopharyngeal carcinoma using multimodality-guided synergistic neural network. Int. J. Radiat. Oncol. Biol. Phys. 2022, 112, 1033–1044. https://doi.org/10.1016/j.ijrobp.2021.11.007

[2] Cheng, K.-H.; Li, W.; Lee, F.K.-H.; Li, T.; Cai, J. Pixelwise Gradient Model with GAN for Virtual Contrast Enhancement in MRI Imaging. Cancers 2024, 16, 999. https://doi.org/10.3390/cancers16050999
