Powering the Last Mile of GenAI

PolyU’s research achieves significant cost reduction in training domain-specific GenAI, democratising AI development and paving the way for Artificial General Intelligence (AGI)

AI is rapidly transforming industries and academia, yet its integration into specialised domains faces challenges. Generative Artificial Intelligence (GenAI) models like GPT and DeepSeek excel at general tasks but lack consistent precision in vertical domains, producing responses without granular technical depth or alignment with specialised standards. In highly specialised scenarios such as healthcare, such models still struggle to achieve reliable accuracy levels.

Meanwhile, domain experts, such as university researchers and professional practitioners, lack the computing resources to train models at scale for their fields. Fragmented data, privacy concerns and copyright restrictions pose further hurdles.

Professor Yang Hongxia, Executive Director of the PolyU Academy for Artificial Intelligence (PAAI), led a research team to discover a novel approach to training and building highly robust, decentralised GenAI models for individual domains at extremely low cost. The team also open-sourced the training framework, enabling more contributors to add domain knowledge.

Decentralising and merging

“Domain experts possess numerous high-quality, domain-specific data, which the major AI players such as OpenAI will not find on the Internet,” Professor Yang said. “PAAI was established to iron out the hurdles for universities and professional organisations such as hospitals and financial enterprises to make full use of their proprietary data to harness AI for knowledge discovery and effective applications.”

Professor Yang’s breakthrough rests on a two-pronged innovation. First, the decentralised “Collaborative Generative Artificial Intelligence” (Co-GenAI) platform developed by her team uses low-bit training to significantly reduce the computational resources required for training domain-specific models without compromising performance, creating favourable conditions for the development of small, specialised models.

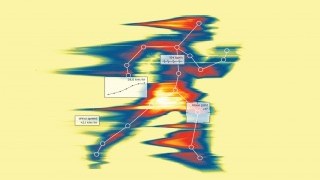

Furthermore, Professor Yang’s team uses a model fusion technique to merge domain-specific models, so that the merged model can acquire new domain-specific knowledge at very low cost while retaining existing knowledge. The team has shown that this collaborative training method can efficiently merge multiple models into a comprehensive domain-specific GenAI model without relying on centralised computing resources. Under a “co-build models, keep data local” paradigm that supports cross-institution, cross-disciplinary collaboration, the resulting models have been shown to surpass state-of-the-art models across 11 widely adopted benchmarks in reasoning, coding, mathematics, and instruction-following. By dramatically reducing reliance on high-end Graphics Processing Unit (GPU) clusters, the team has opened the door for domain experts to actively participate in the training process and shape GenAI innovation.

Ultra-low-resource foundation model training, combined with efficient model fusion, enables academic researchers worldwide to advance GenAI research through collaborative innovation.

~ Professor Yang Hongxia

Delving deeper

Training a GenAI model typically involves pretraining and post-training phases. Pretraining is the foundational stage where the model learns general patterns, language structures, and world knowledge from extensive, unlabelled datasets, requiring significant computational resources. This equips the model with broad capabilities, which are then refined through fine-tuning on smaller, task-specific datasets for applications like chatbots or translation. Post-training occurs after pretraining, focusing on further enhancing the model’s performance, safety, and alignment with user expectations. Techniques such as supervised fine-tuning, preference alignment and reinforcement learning help ensure the model’s outputs are accurate, reliable, and ethically sound.

Centralised training of GenAI models typically demands millions of GPU hours, a level of computational power accessible only to a handful of organisations. In contrast, Professor Yang’s Co-GenAI platform enables the training of local models using ultra-low resources. While most centralised models rely on 16-bit floating-point (FP16) precision, her team has pioneered an 8-bit (FP8) training pipeline that spans both the continual pretraining and post-training stages. This approach reduces computing time by 22% while maintaining model performance. With this technique, PAAI places PolyU among the few institutions globally to have mastered such an innovation.
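To see why lower precision eases hardware demands, consider the memory needed just to hold a model’s weights. The short Python sketch below is illustrative only: the 14-billion-parameter size and the `weight_memory_gb` helper are assumptions chosen for the example, not details of the team’s FP8 pipeline, and the calculation ignores activations, gradients and optimiser states.

```python
# Bits used to store one value in each floating-point format.
BITS_PER_VALUE = {"FP32": 32, "FP16": 16, "FP8": 8}


def weight_memory_gb(num_params: int, fmt: str) -> float:
    """Memory needed to store the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BITS_PER_VALUE[fmt] / 8 / 1e9


# Hypothetical model size, chosen purely for illustration.
num_params = 14_000_000_000

for fmt in ("FP16", "FP8"):
    print(f"{fmt}: {weight_memory_gb(num_params, fmt):.1f} GB")
# FP16: 28.0 GB
# FP8: 14.0 GB
```

Halving the bits per value halves the weight footprint. The 22% reduction in computing time reported above is a separate, measured result that this arithmetic does not attempt to reproduce.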

Complementing this breakthrough is the team’s model fusion strategy, which transfers knowledge from multiple source models into a single pivot model simultaneously. Using just 160 GPU hours, the team successfully merged four state-of-the-art models, including Qwen-2.5-14B-Instruct and Phi-4, avoiding the need for million-scale training budgets. The resulting fused models consistently outperform their original counterparts across key benchmarks, achieving superior performance at a fraction of the computational cost.
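One generic way to transfer knowledge from several models into one is multi-teacher distillation, in which the pivot model is trained to match a blend of the source models’ predictions. The article does not spell out the team’s training objective, so the Python sketch below is an assumption offered for intuition only: the function names, weights and toy logits are hypothetical, and the sketch presumes that all models share the same vocabulary.

```python
import math


def softmax(logits):
    """Turn raw scores into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fused_target(source_logits, weights):
    """Weighted average of the source models' next-token distributions."""
    total = sum(weights)
    probs = [softmax(logits) for logits in source_logits]
    vocab_size = len(probs[0])
    return [
        sum(w * p[i] for w, p in zip(weights, probs)) / total
        for i in range(vocab_size)
    ]


def kl_divergence(target, pivot_probs):
    """KL(target || pivot): a loss the pivot model could be trained to reduce."""
    return sum(t * math.log(t / q) for t, q in zip(target, pivot_probs) if t > 0)


# Toy logits over a three-token vocabulary from two hypothetical source models.
sources = [[2.0, 0.0, -1.0], [0.5, 1.5, 0.0]]
target = fused_target(sources, weights=[1.0, 1.0])

# An untrained pivot that predicts every token with equal probability.
pivot = softmax([0.0, 0.0, 0.0])
print("distillation loss:", kl_divergence(target, pivot))
```

The loss falls to zero when the pivot’s predictions match the blended target, which is the sense in which knowledge from several sources is absorbed at once.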

Applications in the medical domain

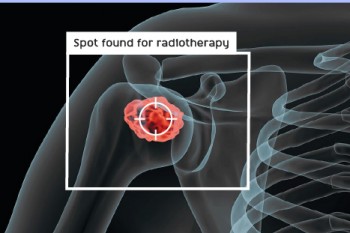

PAAI has already begun supporting medical research. It is working with leading hospitals, including Queen Elizabeth Hospital and Sun Yat-sen University Cancer Center, to develop a cancer foundation model.

Among the many tasks enabled by the cancer foundation model is the identification of target areas for radiotherapy. The model is expected to cut the time a doctor spends on a case by two-thirds. With the decentralisation strategy, Professor Yang envisions the development of a powerful and reliable GenAI model for the medical sector at a low cost, while ensuring the protection of medical data and patients’ privacy.

We are dealing with an immense quantity of medical data, including not only texts but also images and videos. If we can tackle cancer, other medical problems will not be too difficult.

~ Professor Yang Hongxia

PolyU plans to employ the Co-GenAI platform to further foster collaborative research and applications of GenAI across diverse fields, including urban energy, business transformation, smart manufacturing, robotics, intelligent clinical reasoning, grid modernisation, smart construction and smart materials.

“We open-source the training platform, with a long-term mission of making GenAI accessible to all. This will enhance intelligence across society and ensure its benefits are widely shared,” Professor Yang said.

Our team is committed to bridging the final mile in deploying large AI models, enabling businesses, hospitals, and government agencies to effectively leverage AI in real-world scenarios. Integrating domain-specific data and expertise into these models is key to their successful adoption.

~ Professor Yang Hongxia

Professor Yang cited two examples of mature project ideas. “One is an integrated training and inference system. It deeply integrates domestic computing platforms with ultra-low-resource algorithms, achieving collaborative hardware-software design to build an all-in-one system supporting both local training and inference services. The other is the establishment of a full-process community platform for GenAI. Empowered by blockchain-based collaboration and transparent incentive mechanisms, this platform will work with developers worldwide to advance GenAI and jointly build globally collaborative, high-quality foundational models.”

Contributing to AGI

Professor Yang is also excited about PAAI’s readiness to take a promising architectural step towards Artificial General Intelligence (AGI). AGI is a hypothetical future AI system that possesses human-like cognitive abilities across all domains. It would be general-purpose and all-encompassing, matching or surpassing human intelligence.

It is widely believed that scaling laws are key to achieving AGI. According to these laws, increasing model parameters, dataset size, and computational power leads to more advanced intelligence and complex cognitive capabilities. However, as models grow larger, concerns have emerged about diminishing returns and plateauing performance. Compounding this challenge is the scarcity of high-quality data for continued training.
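Scaling laws are usually written as power laws, which also helps explain why returns diminish. The Python sketch below assumes a loss of the form `scale * N ** (-exponent)`, where N is the number of parameters. The `power_law_loss` helper and its constants are hypothetical placeholders chosen for illustration, not fitted values from any published study.

```python
def power_law_loss(num_params, scale=10.0, exponent=0.1):
    """Hypothetical power-law loss curve: loss shrinks as the model grows."""
    return scale * num_params ** (-exponent)


# Model sizes that double at each step, starting from one billion parameters.
sizes = [1_000_000_000 * 2**k for k in range(5)]
losses = [power_law_loss(n) for n in sizes]

# Improvement gained from each successive doubling of model size.
gains = [before - after for before, after in zip(losses, losses[1:])]

for n, loss in zip(sizes, losses):
    print(f"{n:>14,d} parameters -> loss {loss:.4f}")
print("gain per doubling:", [round(g, 4) for g in gains])
```

Under this assumed curve every doubling multiplies the loss by the same factor, so each step costs twice as much as the last yet buys a smaller absolute improvement.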

In the past, merging multiple models into one was often based on empirical experience, and its effectiveness was difficult to predict. The concept of model merging has been strongly advocated by the AI startup Thinking Machines Lab, founded by Mira Murati, former CTO of OpenAI, together with several former members of OpenAI’s management team. It has now been theoretically demonstrated for the first time by Professor Yang’s research team. Through rigorous mathematical derivation, the team proposed the Model Merging Scaling Laws, suggesting that there may be another possible path toward AGI via decentralised AI.

We are very excited about the potential of achieving AGI through decentralisation and model merging. PolyU is poised to provide the infrastructure to contribute to not only the scientific discovery in academia, but also the primary global goal for AGI.

~ Professor Yang Hongxia

Professor Yang Hongxia

Executive Director of PolyU Academy for Artificial Intelligence

Associate Dean (Global Engagement), Faculty of Computer and Mathematical Sciences

Professor, Department of Computing

Director of University Research Facility in Big Data Analytics

Prior to joining PolyU in July 2024, Professor Yang Hongxia served as Head of Large Language Models in the US at ByteDance, AI Scientist and Director at Alibaba Group, Principal Data Scientist at Yahoo! Inc., and Research Staff Member at the IBM T.J. Watson Research Center. Notably, she founded the foundation model teams at both Alibaba and ByteDance, establishing herself as a pioneer in Generative AI.

Professor Yang’s research in GenAI has gained support from industry partners such as Hong Kong Science and Technology Park, Alibaba, and Huashan Hospital affiliated to Fudan University. Her projects have received funding from the Theme-based Research Scheme 2025/26 under the Research Grants Council; the Research, Academic and Industry Sectors One-plus Scheme under the Innovation and Technology Commission of the Government of the Hong Kong Special Administrative Region of the People’s Republic of China; and the Artificial Intelligence Subsidy Scheme under Cyberport.