AI for Digital Health

Cover Story

Prof. Hualou Liang, Interim Head of the Division of AI and the Humanities, Chair Professor of Neuroscience and Artificial Intelligence, and Associate Director of University Research Facility in Behavioral and Systems Neuroscience, has recently joined the Faculty. We invited him to share insights into his pioneering research at the intersection of AI, neuroscience and healthcare.

We are living in a boom time for AI across humanities and technology, and one of the most exciting frontiers is neuroscience. At the intersection of AI and brain science, my lab has been pursuing several innovative directions: (1) developing machine learning methods for causal discovery in large-scale neuroscience data (spikes, LFP, EEG/MEG, and fMRI), (2) applying deep learning to predict brain age from multimodal neuroimaging (structural MRI, DTI, and resting-state fMRI), and (3) leveraging AI and natural language processing in healthcare. Each of these areas draws on unique strengths at PolyU—neuroscience, brain imaging, and language technology—showing how AI can accelerate discovery while bridging science, technology, and human health.

In this article, I’d like to spotlight one of our recent projects that explores how large language models (LLMs) can be used to predict dementia from speech alone (Agbavor and Liang, 2022). In this work, we harness the power of LLMs to represent and analyse everyday speech across different levels of language, with the goal of enabling earlier detection and diagnosis of Alzheimer’s disease (AD).

Early detection of the cognitive decline involved in Alzheimer’s Disease and Related Dementias in older adults living alone is essential for developing, planning, and initiating interventions and support systems to improve patients’ everyday function and quality of life. Conventional, clinic-based methods for early diagnosis are expensive, time-consuming, and impractical for large-scale screening. We propose to discover cost-effective and user-friendly language and behaviour markers that signal cognitive impairment, and to integrate these biomarkers into machine learning models for early detection of cognitive decline. We hypothesise that progressive cognitive impairment elicits detectable changes in the way people talk and behave, which can be captured by inexpensive and accessible sensors and leveraged by machine learning algorithms to build predictive models that quantify the risk of AD.

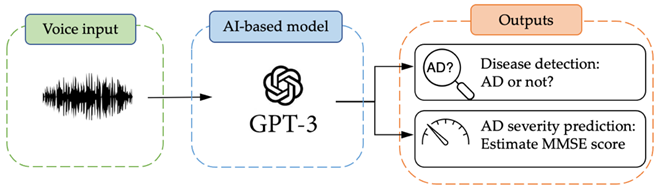

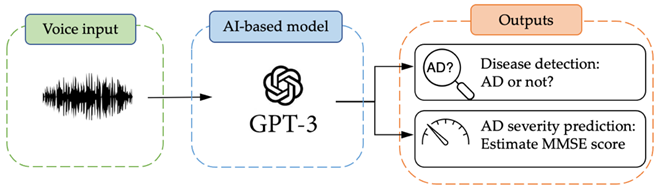

AD is currently an incurable brain disorder. Speech, a quintessentially human ability, has emerged as an important biomarker of neurodegenerative disorders like AD. By focusing on AD recognition using speech, we depart from neuropsychological and clinical evaluation approaches, as speech analysis has the potential to enable novel applications for speech technology in longitudinal, unobtrusive monitoring of cognitive health. Indeed, we recently showed that GPT-3, a language model developed by OpenAI, could be a step towards early prediction of AD through speech (Figure 1). Specifically, we demonstrated that text embeddings, powered by GPT-3, can be reliably used to (1) distinguish individuals with AD from healthy controls, and (2) infer the subject’s cognitive testing score, both based solely on speech data. Our results suggest that there is huge potential to develop and translate a fully deployable AI-driven tool for early diagnosis of dementia and for directing interventions tailored to individual needs, thereby improving the quality of life for individuals diagnosed with dementia. Since its publication, this work has received widespread press coverage, featuring in more than two dozen media outlets, including Psychology Today, Jerusalem Post, The New York Times, and IEEE Spectrum.
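To make the idea of classifying speech from its embedding concrete, here is a minimal sketch in Python. It is not the pipeline used in the published study: a simple nearest-centroid classifier with cosine similarity is swapped in for illustration, the three-dimensional vectors are synthetic placeholders (real text embeddings have hundreds or thousands of dimensions and would come from an embedding model applied to transcribed speech), and all function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def centroid(vectors):
    """Element-wise mean of a list of vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def fit_centroids(embeddings, labels):
    """Compute one mean embedding per class label."""
    by_label = {}
    for vec, label in zip(embeddings, labels):
        by_label.setdefault(label, []).append(vec)
    return {label: centroid(vecs) for label, vecs in by_label.items()}


def predict(centroids, vec):
    """Return the label whose centroid is most similar to vec."""
    return max(centroids, key=lambda label: cosine(centroids[label], vec))


# Synthetic placeholder "embeddings" (assumption: NOT real data).
train_embeddings = [
    [0.9, 0.1, 0.0],
    [0.8, 0.2, 0.1],
    [0.1, 0.9, 0.2],
    [0.2, 0.8, 0.1],
]
train_labels = ["AD", "AD", "control", "control"]

centroids = fit_centroids(train_embeddings, train_labels)
prediction = predict(centroids, [0.85, 0.15, 0.05])
print(prediction)
```

In practice, one would replace the placeholder vectors with embeddings of real transcripts and evaluate a stronger classifier with cross-validation; the sketch only shows that, once speech is mapped to vectors, the diagnosis task reduces to standard supervised learning.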

Multilingual AD Identification through Speech. Although automated methods for detecting cognitive impairment with machine learning have attracted growing interest, most approaches have not examined which speech features can be generalised and transferred across languages for AD prediction. To our knowledge, no prior work has systematically explored the role of language features in multilingual AD detection. Our project addresses this gap by training models on English speech data and evaluating their performance on spoken Chinese data (Agbavor and Liang, 2024). To ensure privacy and security, we developed on-premise, open-source Small Language Models (SLMs). These models performed exceptionally well on the international stage, earning us both first and second place at the INTERSPEECH 2024 TAUKADIAL Challenge for Dementia Prediction.

Multimodal Machine Learning for AD Diagnosis. Our aim is to move beyond purely linguistic patterns and incorporate diverse types of data to strengthen AI-based assessment. As a natural next step, we plan to apply natural language processing and machine learning algorithms to extract language markers (linguistic and acoustic features), behavioural markers (such as eye-movement data), imaging markers (MRI), and genetic markers (e.g., the APOE gene), and then develop multimodal learning approaches to fuse this information. In this way, our work on computational large language models can be greatly enhanced by the outstanding expertise in Chinese and bilingual studies and language-related research at PolyU.
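One simple way to fuse markers from several modalities is early fusion: standardise each modality's features separately, then concatenate them into a single vector per participant. The Python sketch below illustrates that idea only; it is an assumption about how such fusion could look, not a description of the planned method, and the modality names and numbers are invented placeholders.

```python
import statistics


def zscore_columns(rows):
    """Standardise each feature column to zero mean and unit variance."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    # Fall back to 1.0 for constant columns to avoid division by zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return [
        [(x - m) / s for x, m, s in zip(row, means, stds)]
        for row in rows
    ]


def early_fusion(modalities):
    """Concatenate standardised features from every modality.

    `modalities` maps a modality name to a list of feature rows,
    one row per participant, in the same participant order.
    """
    scaled = [zscore_columns(rows) for rows in modalities.values()]
    return [sum(parts, []) for parts in zip(*scaled)]


# Placeholder values for two participants (assumption: NOT real data).
modalities = {
    "language": [[1.0, 2.0], [3.0, 4.0]],
    "imaging": [[10.0], [20.0]],
}

fused = early_fusion(modalities)
print(fused)
```

Standardising per modality keeps a feature measured on a large scale (for example, an imaging volume) from dominating one measured on a small scale. Alternatives such as late fusion, where a separate model is trained per modality and the predictions are combined, trade this simplicity for robustness to missing modalities.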

Our key innovation in applying LLMs lies in harnessing their vast semantic knowledge to generate text embeddings—vector representations of transcribed speech that capture the underlying meaning of the input. A fascinating open question, however, is how these embedding models encode linguistic features in individuals with Alzheimer’s disease compared to healthy controls. To address this, I would greatly welcome collaborations with domain experts in PolyU’s vibrant AI research community as well as with language scholars, to help decode the embedding models and uncover the critical factors that drive both their successes and failures in dementia prediction.

Key References:

Agbavor F, Liang H. Predicting dementia from spontaneous speech using large language models. PLOS Digital Health 1 (12), e0000168, 2022.

Agbavor F, Liang H. Multilingual prediction of cognitive impairment with large language models and speech analysis. Brain Sciences 14 (12), 1292, 2024.
