Perception & SLAM

Visual perception and Simultaneous Localization and Mapping (SLAM) technologies extend UAV applications from outdoor environments, where GNSS is available, to GNSS-denied indoor environments. Our group started perception research in 2018 and has since developed a complete and versatile SLAM framework for UAVs.

Feedback Loop Based Visual Inertial SLAM

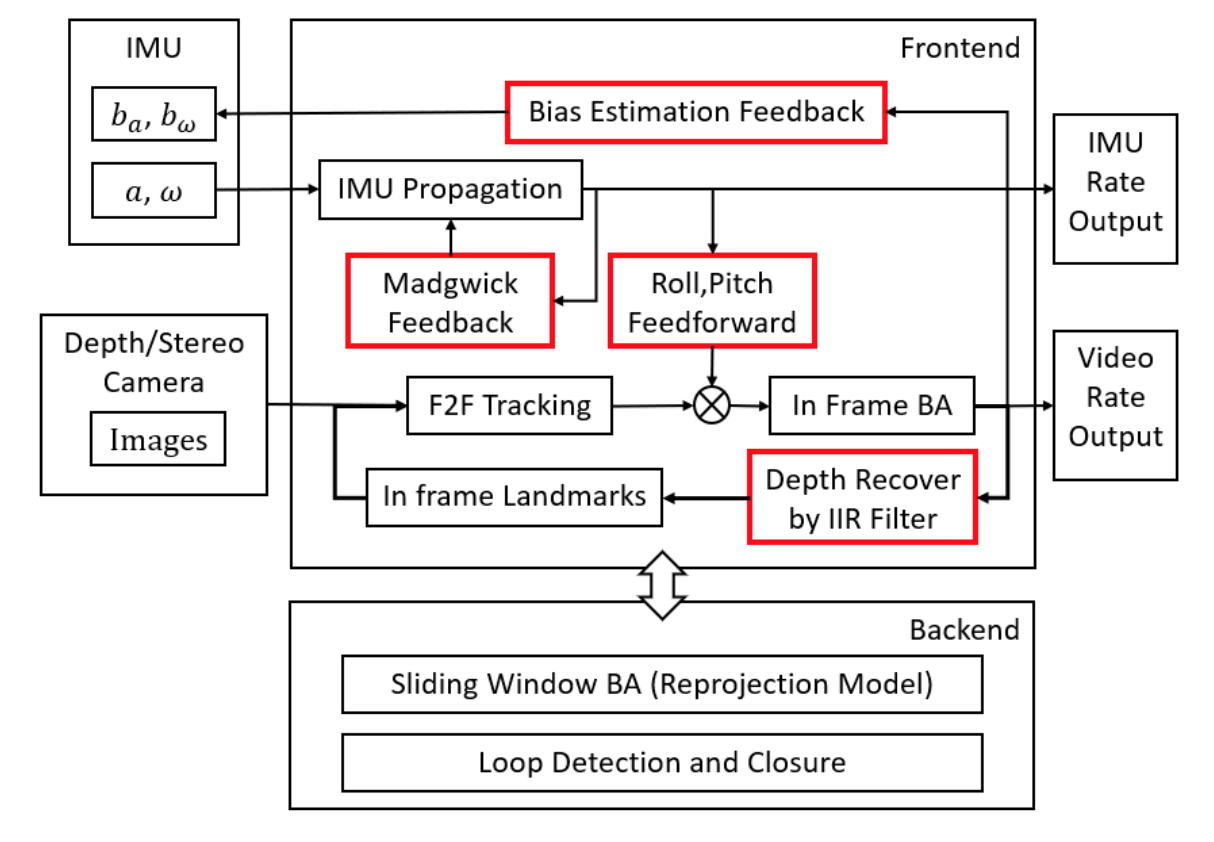

In this paper, we present a novel stereo visual inertial pose estimation method. Unlike the widely used filter-based and optimization-based approaches, it models the pose estimation process as a control system. Feedback and feedforward loops are designed to keep the system stable: a gradient decreased feedback loop, a roll-pitch feedforward loop, and a bias estimation feedback loop. The resulting system, FLVIS (Feedforward-feedback Loop-based Visual Inertial System), is evaluated on the popular EuRoC MAV dataset, where it achieves high accuracy and robustness compared with other state-of-the-art visual SLAM approaches. The system has also been implemented and tested on a UAV platform, and its source code is publicly available to the research community.
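To give a feel for how feedback loops can stand in for a filter or optimizer, the Python sketch below corrects integrated gyroscope rate with a proportional term and estimates the gyro bias with an integral term, in the style of a Mahony complementary filter. It is a one-axis illustration, not FLVIS's implementation: the gains `kp` and `ki`, the helper names `roll_pitch_from_accel` and `BiasFeedbackEstimator`, and the use of an accelerometer-derived roll as the reference angle are all assumptions made for this example.

```python
import math


def roll_pitch_from_accel(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Roll and pitch (rad) implied by a static accelerometer reading.

    Assumes the reading contains only the gravity reaction, with no linear
    acceleration, so it defines a gravity-aligned roll/pitch reference.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


class BiasFeedbackEstimator:
    """Hypothetical one-axis estimator: gyro integration plus feedback loops.

    kp: proportional gain pulling the estimate toward the reference angle.
    ki: integral gain; a persistent error is attributed to gyro bias.
    """

    def __init__(self, kp: float = 2.0, ki: float = 1.0) -> None:
        self.kp = kp
        self.ki = ki
        self.angle = 0.0   # estimated angle (rad)
        self.bias = 0.0    # estimated gyro bias (rad/s)

    def update(self, gyro_rate: float, ref_angle: float, dt: float) -> float:
        err = ref_angle - self.angle
        # Bias loop: integrate the error into the bias estimate.
        self.bias -= self.ki * err * dt
        # Propagate with the bias-corrected rate plus a proportional correction.
        self.angle += (gyro_rate - self.bias + self.kp * err) * dt
        return self.angle


# Stationary vehicle rolled by 0.1 rad; the gyro reads a constant 0.05 rad/s bias.
g, true_roll, gyro_bias, dt = 9.81, 0.1, 0.05, 0.01
ref_roll, _ = roll_pitch_from_accel(0.0, g * math.sin(true_roll), g * math.cos(true_roll))

est = BiasFeedbackEstimator(kp=2.0, ki=1.0)
for _ in range(2000):              # 20 s of samples at 100 Hz
    est.update(gyro_bias, ref_roll, dt)
print(round(est.angle, 3), round(est.bias, 3))  # expected: 0.1 0.05
```

With both gains positive, the continuous-time error dynamics have the characteristic polynomial s² + kp·s + ki, which is stable, so the bias estimate settles at the true gyro bias once the integrated angle stops disagreeing with the reference.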

MLmapping (Multilayer Mapping)

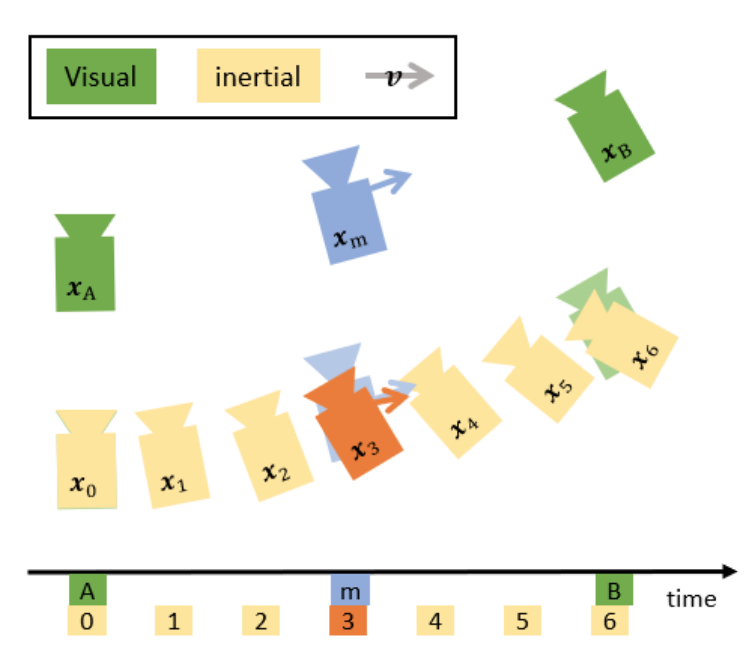

In a modern UAV navigation system, mapping serves as both the back end of perception and the front end of path planning. Considering the requirements of UAV navigation and the capabilities of current embedded computing platforms, we designed and implemented a novel multilayer mapping framework that divides the map into three layers: awareness, local, and global. The awareness map is built in cylindrical coordinates, which enables fast raycasting. The local map is a probabilistic volumetric map. The global map uses dynamic memory management, allocating memory for the active mapping area and recycling memory from inactive areas. The framework runs in three parallel threads: an awareness thread, a local-global thread, and a visualization thread. We evaluated it with vision-based sensors in both simulation and real-world scenarios. The framework provides several kinds of map output for global and local path planners, and the implementation is open source for the research community.
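The Python sketch below illustrates two ideas from this description under simplifying assumptions: indexing cells in cylindrical coordinates (rho, phi, z) around the sensor, and a clamped log-odds occupancy update, a common way to build a probability-based volumetric map. Because every ray from the sensor origin keeps a constant azimuth, raycasting reduces to stepping the radial index within a single phi bin. For brevity the sketch stores log-odds directly in the cylindrical cells, whereas the framework keeps its probabilistic map in a separate local layer; the resolutions, log-odds increments, and the names `cylindrical_index` and `AwarenessMap` are hypothetical and not taken from MLmapping.

```python
import math

# Hypothetical parameters for illustration; not MLmapping's values.
RHO_RES = 0.2               # radial cell size (m)
PHI_BINS = 72               # azimuth bins (5 degrees each)
Z_RES = 0.2                 # vertical cell size (m)
L_HIT, L_MISS = 0.85, -0.4  # log-odds increments for hit / pass-through
L_MIN, L_MAX = -2.0, 3.5    # clamping bounds keep the map responsive


def cylindrical_index(x: float, y: float, z: float) -> tuple[int, int, int]:
    """Map a sensor-frame point to a (rho, phi, z) cell index."""
    rho = math.hypot(x, y)
    phi = math.atan2(y, x) % (2 * math.pi)
    return (math.floor(rho / RHO_RES),
            int(phi / (2 * math.pi) * PHI_BINS) % PHI_BINS,
            math.floor(z / Z_RES))


class AwarenessMap:
    """Sparse cylindrical grid holding a log-odds value per observed cell."""

    def __init__(self) -> None:
        self.logodds: dict[tuple[int, int, int], float] = {}

    def _update(self, cell: tuple[int, int, int], delta: float) -> None:
        value = self.logodds.get(cell, 0.0) + delta
        self.logodds[cell] = min(L_MAX, max(L_MIN, value))

    def insert_point(self, x: float, y: float, z: float) -> None:
        """Raycast from the sensor origin to one measured point."""
        rho = math.hypot(x, y)
        ir, ip, iz = cylindrical_index(x, y, z)
        # The azimuth bin is constant along the ray, so only the radial
        # index is stepped; z is interpolated linearly at each step.
        for k in range(ir):
            rho_k = (k + 0.5) * RHO_RES
            z_k = z * rho_k / rho
            self._update((k, ip, math.floor(z_k / Z_RES)), L_MISS)
        self._update((ir, ip, iz), L_HIT)

    def occupancy(self, cell: tuple[int, int, int]) -> float:
        """Occupancy probability; unobserved cells return 0.5."""
        return 1.0 / (1.0 + math.exp(-self.logodds.get(cell, 0.0)))


m = AwarenessMap()
for _ in range(3):                  # the same obstacle seen in three scans
    m.insert_point(2.1, 0.0, 0.1)
hit = cylindrical_index(2.1, 0.0, 0.1)
print(hit, round(m.occupancy(hit), 3), round(m.occupancy((0, 0, 0)), 3))
# expected: (10, 0, 0) 0.928 0.231
```

Keeping cells in a dictionary also allocates memory only for cells that have actually been observed, which loosely mirrors the on-demand allocation described for the global map.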

End-to-End UAV Simulation for Visual SLAM and Navigation

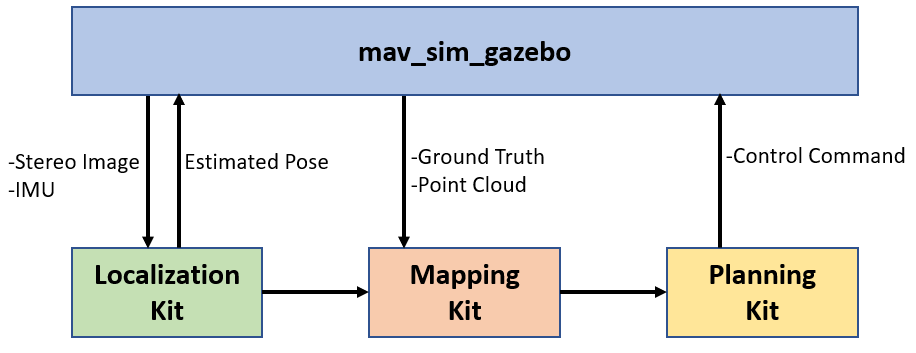

Visual Simultaneous Localization and Mapping (v-SLAM) and navigation of multirotor Unmanned Aerial Vehicles (UAVs) in unknown environments have grown in popularity for both research and education. However, because of the complex hardware setup, safety precautions, and battery constraints, extensive physical testing can be expensive and time-consuming. Simulation tools offer an alternative, lowering the barrier to algorithm testing and validation before field trials. In this letter, we extensively customize the ROS-Gazebo-PX4 simulator and provide an end-to-end simulation solution for UAV v-SLAM and navigation research. We also integrated a set of localization, mapping, and path-planning kits into the simulation platform. In our simulation, complex environments and onboard sensors interact simultaneously with our navigation framework to accomplish specific surveillance missions. With this end-to-end simulation, we achieved click-and-fly autonomous UAV navigation. The source code is open to the research community.