Sensing Breakthroughs

Overcoming major hurdles to make artificial intelligence (AI) sensing systems more efficient

Talking to Siri. Clearing immigration and customs with a facial scan. Receiving meals from a restaurant service robot. Riding in a self-driving vehicle. The common denominator in all these new lifestyle trends is sensory AI.

Sensory AI transforms ordinary machines into entities that can see, hear, smell, taste, and feel, much like humans do. By interpreting sensory information, these devices can understand their environment more holistically and perform complex tasks that require a nuanced perception of the world.

Stumbling blocks

Sensory systems are becoming an integral part of daily life, but several challenges hinder their development.

First, they consume significant power, which makes deployment difficult in resource-limited environments. Second, they often suffer from high latency, a problem for applications such as autonomous vehicles that must make split-second decisions or industrial automation systems that require immediate responses. Lastly, processing sensory data, especially high-resolution vision and audio, demands substantial memory and computational resources. This can be a significant obstacle to deploying sensory systems on edge devices.

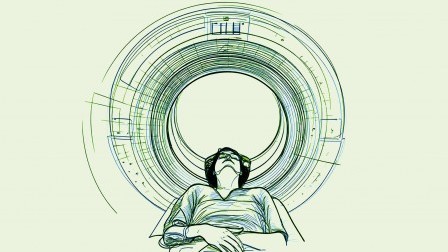

Professor Chai Yang, Associate Dean (Research) of the Faculty of Science and Chair Professor of the Department of Applied Physics, and his research team are breaking down these barriers, and their tireless efforts have earned renowned awards locally and internationally.

Sensory systems’ high latency is a major obstacle to the development of autonomous vehicles.

Breaking the walls

The team has studied the concepts and implementation of bioinspired near-sensor and in-sensor computing, which carry out part of the processing next to or inside the sensor itself, and has successfully reduced the amount of redundant data transmitted between sensing and processing units. As a result, large amounts of data can now be processed efficiently while consuming less power.

Inspired by natural sensory systems such as the human retina, Professor Chai and his team have offered a solution that avoids relying solely on backend computation. They have also developed sensors that can adapt to different light intensities and wavelengths, thus drastically reducing power consumption and latency. This approach improves machine vision systems used for identification tasks.
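To give a rough sense of why processing data at the sensor reduces traffic, the following is a minimal Python sketch. It assumes a simple frame-differencing scheme, a hypothetical stand-in chosen for illustration rather than the team's actual method: the sensor forwards only pixels whose brightness changed by more than a threshold, instead of sending every full frame to a backend processor. The function names `changed_pixels` and `transmission_ratio` and the threshold value are illustrative assumptions.

```python
def changed_pixels(prev, curr, threshold):
    """Return (row, col, value) for pixels whose intensity changed by more than threshold.

    Hypothetical near-sensor filter: only these pixels would be sent onward.
    """
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(prev_row, curr_row)):
            if abs(q - p) > threshold:
                events.append((r, c, q))
    return events


def transmission_ratio(prev, curr, threshold):
    """Fraction of pixel values sent, compared with transmitting the full frame.

    Counts values only; real event formats also carry coordinates and timestamps.
    """
    total = sum(len(row) for row in curr)
    return len(changed_pixels(prev, curr, threshold)) / total


# Example: a 4x4 frame in which only one pixel brightens noticeably.
frame_a = [[10] * 4 for _ in range(4)]
frame_b = [row[:] for row in frame_a]
frame_b[2][1] = 200  # significant change: forwarded
frame_b[0][0] = 12   # small fluctuation below the threshold: filtered out

print(changed_pixels(frame_a, frame_b, threshold=5))      # [(2, 1, 200)]
print(transmission_ratio(frame_a, frame_b, threshold=5))  # 0.0625
```

In this toy case, one of 16 pixel values leaves the sensor. The general point is that discarding redundant data before transmission cuts the volume the backend must move and process, which is where the power and latency savings described above come from.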

Professor Chai’s research is enabling the use of sensory AI in a wide range of real-time, mission-critical systems, such as autonomous vehicles that must make instantaneous decisions. The focus is on specialised hardware accelerators that can reduce inference latency to mere microseconds.

His research also explores innovative in-sensor computing paradigms and hardware-software co-design approaches. The aim is to reduce the transmission of huge amounts of data and to enable complex sensory AI models to run on resource-constrained platforms.

The hardware architectures and optimisation techniques he has developed lay the foundation for deploying advanced sensory AI systems in mobile devices, IoT sensors and edge computing platforms. These innovations are expected to significantly enhance a wide range of smart-city applications, from autonomous vehicles to industrial automation.

Professor Chai Yang (back, second left) and his team are revolutionising sensory AI by breaking down barriers in power consumption and latency.

Building on success

These outstanding findings have been published in high-impact journals including Nature Electronics and Nature Nanotechnology, and have been highlighted in Nature and IEEE Spectrum, among others.

Professor Chai is a recipient of the Falling Walls Science Breakthroughs award in Engineering and Technology for his work on “Breaking the Wall of Efficient Sensory AI Systems”. Locally, he was awarded the BOCHK Science and Technology Prize in Artificial Intelligence and Robotics for his scientific discoveries. He is also an IEEE Fellow, an Optica Fellow, and an NSFC Distinguished Young Scholar.

Professor Chai is now looking for innovative ways to build on these achievements. He envisions developing cutting-edge microelectronic and nanoelectronic devices with new functionalities. “My long-term goal is to create imaging technology capable of perceiving three-dimensional depth, four-dimensional spatial-temporal and multiple spectral information, employing bioinspired mechanisms to reduce power consumption and latency,” he said.