Prof. Yang Chai

News

Welcome to ISMC2024!

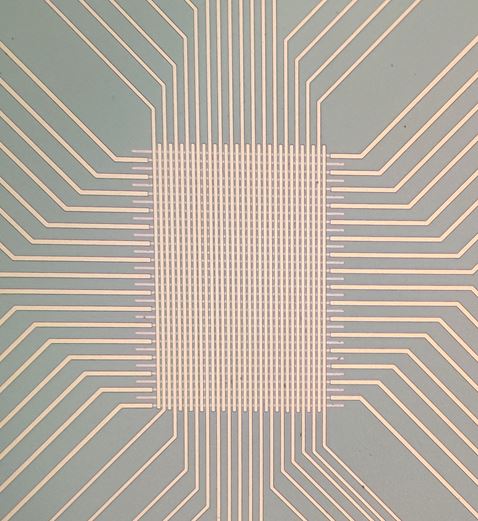

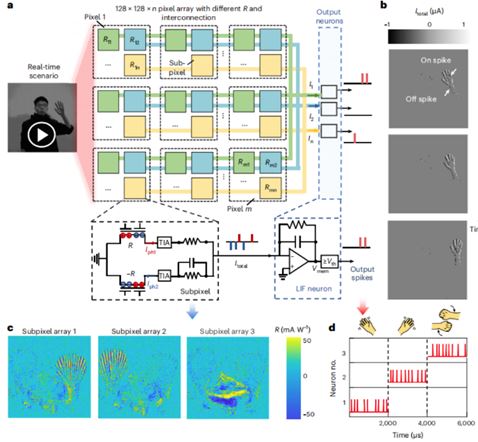

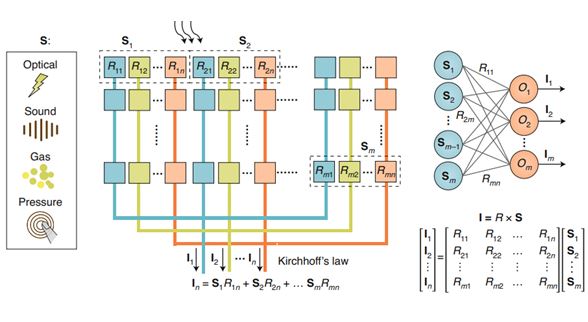

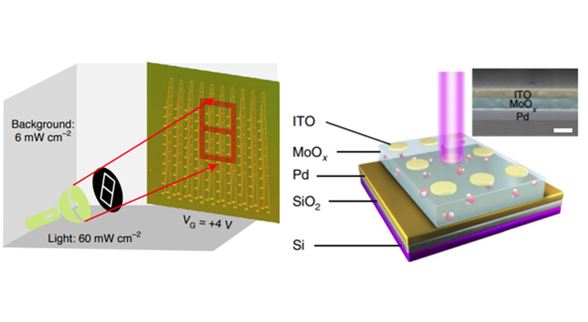

Computational event-driven vision sensors for in-sensor spiking neural networks

Research Direction

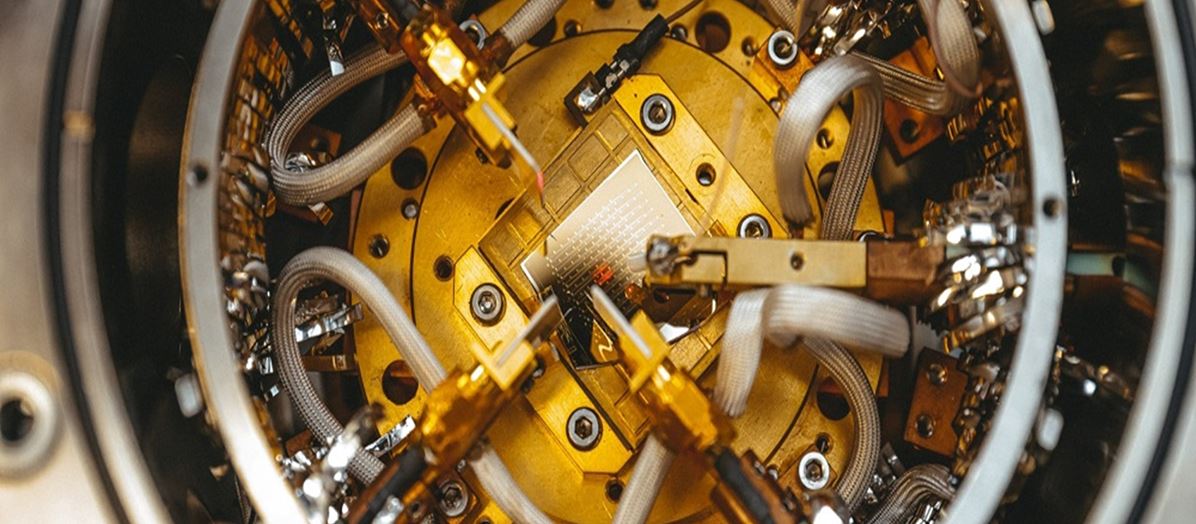

Near-sensor and In-sensor Computing

Emerging Memories