Self-paced reading experiment on SOME and MOST

This is not a real paper; it is an informal writeup of an unpublished experiment. The research was conducted in 2012 at Peking University (while I was a student at the University of Kansas and receiving support from there as well), and this report was written in 2014. See My file drawer for an explanation of why I'm recording this. Comments and questions are welcome. —Stephen Politzer-Ahles

1 Introduction

In Politzer-Ahles (2012) I tested whether SOME implies NOT MOST in addition to NOT ALL. I expected that, in a sentence like [the Mandarin equivalent of] "Some of the people are eating hot dogs", the last word would be verified faster when the sentence followed a picture in which fewer than half of the people were eating hot dogs than when it followed a picture in which more than half (i.e., most) were eating hot dogs. This prediction was not borne out: these conditions showed no difference. I hypothesized that the failure to find an effect might be attributable to two factors: 1) speeded verification may not have been an appropriate task; and 2) MOST was not used in the fillers, and thus was not a relevant lexical alternative. The present study attempted to address both factors by using self-paced reading and by including MOST in the fillers. Other than these changes and the new sample of participants, the methods were nearly the same as in Politzer-Ahles (2012).

2 Methods

2.1 Participants

Data were collected from 40 native speakers of Mandarin residing in Beijing; most were current or former students at Peking University. Many of the participants were bilingual in Standard Mandarin and a local language or dialect, but all participants reported that Standard Mandarin was the language they acquired earliest and the one they use most. All had normal or corrected-to-normal vision. All participants provided their informed consent to participate in the study and received payment, and all methods were approved by the Ethics Committee of the Department of Psychology, Peking University, and the Human Subjects Committee of Lawrence at the University of Kansas.

2.2 Materials and design

Twenty-four sentences and matching pictures were created to serve as the critical stimuli; they were adapted from Politzer-Ahles et al. (2013: Experiment 2), and the sentences were modified where necessary such that each followed the form "图片里 (In the picture), / 有的 (some of the) / <subjects> / <verb> / <object> / <...>" (slashes indicate how the sentences were divided into regions). The sentences were in Mandarin Chinese. The objects were all bimorphemic words written with two characters, and had a cloze probability of 100% when preceded by matching pictures in a sentence completion test (Politzer-Ahles et al., 2013: Experiment 2). Each sentence had three regions following the critical object, and either four or five regions preceding it. The sentences used either quantifier-subject-verb-object word order (e.g., "Some of the boys are eating hot dogs...") or locative inversion (e.g., "above some of the houses are floating clouds..."; in Chinese, the prepositional phrase in this sentence follows the quantifier-noun phrase rather than preceding it), and the verbs were all marked for aspect.

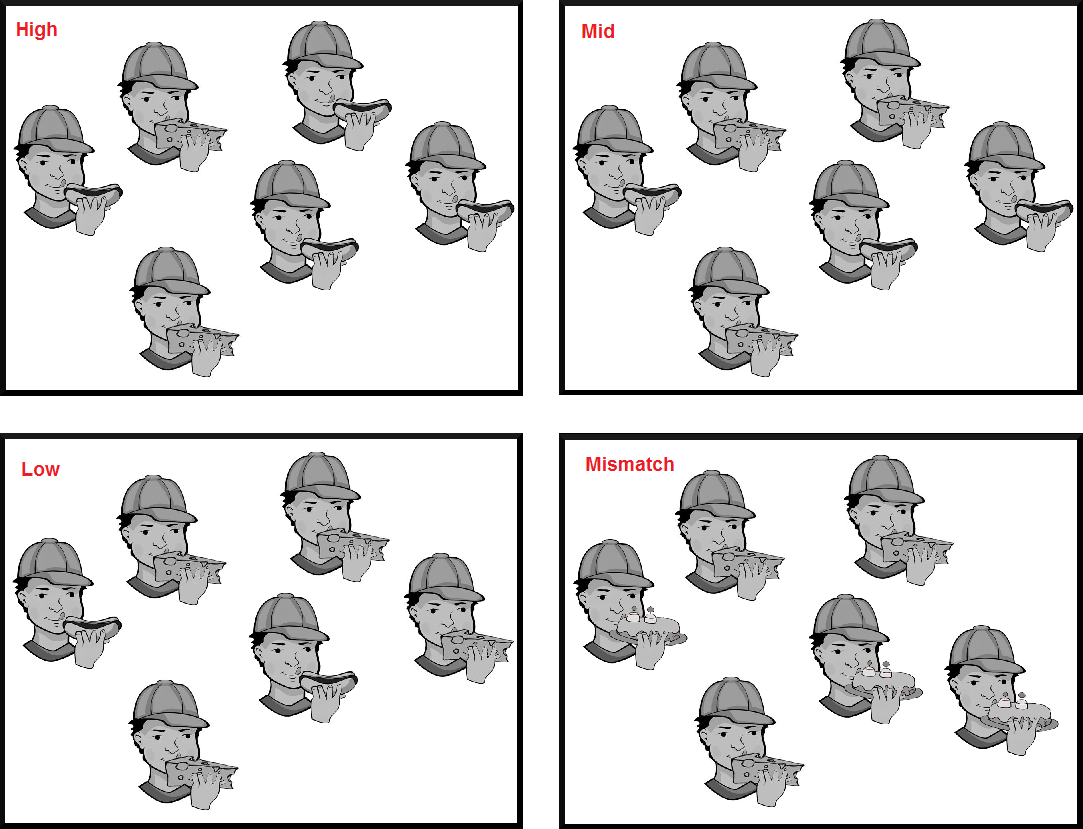

Four pictures were made to complement each sentence (see Figure 1). Each picture was a "some"-type array (i.e., included actors that were doing different things and could thus be described using a SOME sentence, as opposed to a picture made up of actors that were all doing the same thing and would be described using an ALL sentence) and included six actors or items. In the picture array corresponding to the High condition, four of the actors (the majority) are interacting with the object that is ultimately mentioned in the sentence, and two are interacting with some other object. In the Mid picture array, three of the actors pictured are interacting with that object and three with another object; in the Low picture array two are interacting with that object and four with another. In the Mismatch picture array, different proportions of actors are interacting with different objects (four-two, three-three, or two-four; 16 of each type, randomly distributed across the different sets), but none of the objects are those that are mentioned in the sentence. The reason for including six actors in each picture array was to ensure that the picture array in the Low condition would have at least two of the low-occurring item (because SOME might also imply more than one, picture arrays in which the low-occurring item only appears once were not used) and that the difference in number between low- and high-occurring items would be salient (in a picture array with five actors/items, the difference between two items and three items may be difficult to notice). Thus, there were three conditions with matching objects that represent the majority, minority, or half of the objects in the picture, and one condition with entirely mismatching objects; the sentences remained identical across all conditions, while the picture arrays varied.

Figure 1. A sample picture set to go with the sentence 有的人在吃热狗 ("Some of the people are eating hot dogs.") The conditions formed by each picture context are, from the upper-left going clockwise, High, Mid, Low, and Mismatch. On a given trial only one of these four pictures would be presented.

The 24 experimental items were interspersed with 124 fillers of the following types:

- 18 ALL sentences correctly paired with ALL-type picture arrays;

- 3 ALL sentences with objects that mismatched the object in the picture array;

- 3 ALL sentences with verbs that mismatched the action in the picture array;

- 12 ALL sentences incorrectly paired with SOME-type picture arrays (3 uneven arrays and 9 even arrays);

- 13 MOST sentences correctly paired with uneven picture arrays;

- 3 MOST sentences incorrectly paired with ALL-type picture arrays;

- 3 MOST sentences incorrectly paired with uneven picture arrays (where the object referred to in the sentence corresponded to the minority rather than the majority object);

- 6 MOST sentences incorrectly paired with even picture arrays;

- 3 MOST sentences with mismatching objects;

- 3 MOST sentences with mismatching verbs;

- 12 SOME sentences incorrectly paired with ALL-type picture arrays (i.e., underinformative sentences);

- 6 SOME sentences with mismatching verbs;

- 23 sentences using the existential quantifier 有些 (yǒu xiē, "there are some"): 5 correctly paired with ALL-type picture arrays, 6 with even SOME-type arrays, and 12 with uneven SOME-type arrays;

- 8 existential-quantifier sentences with mismatching objects;

- 8 existential-quantifier sentences with mismatching verbs.

The fillers were designed such that each quantifier and each "some"-type picture array (i.e., even and uneven arrays) was associated with a "consistent" response about 50% of the time.
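The filler counts can be tallied to confirm the total of 124 and to see how close each quantifier comes to a 50% "consistent" rate. This is a rough sketch under stated assumptions, not the author's design script: every filler described as "correctly paired" is counted as consistent and every other type as inconsistent (so the underinformative SOME fillers count as inconsistent, although participants may rate them either way), and the 18 High/Mid/Low critical items per list are counted as consistent on a literal reading of SOME. The names `FILLERS`, `CRITICAL`, and `consistent_share` are hypothetical.

```python
# Each row: (quantifier, response label, count), transcribed from the filler list.
# Assumption: "correctly paired" = consistent; every other filler type = inconsistent.
FILLERS = [
    ("ALL", "consistent", 18),      # ALL + ALL-type arrays
    ("ALL", "inconsistent", 3),     # mismatching object
    ("ALL", "inconsistent", 3),     # mismatching verb
    ("ALL", "inconsistent", 12),    # ALL + SOME-type arrays
    ("MOST", "consistent", 13),     # MOST + uneven arrays (object is the majority)
    ("MOST", "inconsistent", 3),    # MOST + ALL-type arrays
    ("MOST", "inconsistent", 3),    # object is the minority
    ("MOST", "inconsistent", 6),    # MOST + even arrays
    ("MOST", "inconsistent", 3),    # mismatching object
    ("MOST", "inconsistent", 3),    # mismatching verb
    ("SOME", "inconsistent", 12),   # underinformative: SOME + ALL-type arrays
    ("SOME", "inconsistent", 6),    # mismatching verb
    ("有些", "consistent", 23),      # 5 ALL-type + 6 even + 12 uneven arrays
    ("有些", "inconsistent", 8),     # mismatching object
    ("有些", "inconsistent", 8),     # mismatching verb
]

# Assumption: per list, 18 High/Mid/Low critical items are consistent and 6 Mismatch are not.
CRITICAL = [("SOME", "consistent", 18), ("SOME", "inconsistent", 6)]


def consistent_share(rows):
    """Proportion of trials labelled 'consistent' for each quantifier."""
    totals, consistent = {}, {}
    for quantifier, label, count in rows:
        totals[quantifier] = totals.get(quantifier, 0) + count
        if label == "consistent":
            consistent[quantifier] = consistent.get(quantifier, 0) + count
    return {q: consistent.get(q, 0) / totals[q] for q in totals}


filler_total = sum(count for _, _, count in FILLERS)
shares = consistent_share(FILLERS + CRITICAL)
print(filler_total)  # 124
print({q: round(s, 2) for q, s in shares.items()})
```

Under these assumptions the shares come out at 0.50 for ALL, about 0.42 for MOST, about 0.43 for SOME, and about 0.59 for 有些; counting the underinformative fillers as consistent instead would raise the SOME share.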

2.3 Procedure

Participants were seated comfortably in a brightly lit room in front of a CRT monitor. Pictures and sentences were presented at the center of the screen using the Presentation software package (Neurobehavioral Systems). Each trial began with a picture array (768 × 576 pixels on a 1024 × 768 pixel screen) displayed for 6000 ms, to ensure that participants had time to inspect the picture carefully and fully process the relative proportions of the different objects. The picture array was immediately followed by a blank screen of random duration between 250 and 750 ms, after which the sentence began. Sentences were presented in 22pt SimSun font using a word-by-word, non-cumulative moving-window self-paced reading paradigm (Just et al., 1982). In this paradigm, each word of the sentence is initially masked by a series of dashes equal in length to that word; the masking covers words and punctuation but preserves the spaces between words. Each time the participant presses a button on a gamepad, the next word is revealed and the previously displayed word is masked again.
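The non-cumulative moving window can be sketched as a function that returns what is on screen after each button press: first the fully masked sentence, then one revealed region at a time with all other regions masked. This is a minimal illustration, not the Presentation script used in the study; `moving_window_frames` is a hypothetical name, and one mask character per text character is an assumption (in a SimSun display, an ASCII dash is narrower than a Chinese character, so a real implementation might use wider mask glyphs).

```python
def moving_window_frames(regions, mask_char="-"):
    """Return the successive displays of a non-cumulative moving-window trial.

    Frame 0 shows every region masked; frame i (1-based) reveals only region i-1
    and re-masks everything else. Spaces between regions are preserved.
    """
    # Assumption: one mask character per character of the region
    masks = [mask_char * len(region) for region in regions]
    frames = [" ".join(masks)]
    for i, region in enumerate(regions):
        shown = list(masks)   # start again from the fully masked line
        shown[i] = region     # reveal only the current region
        frames.append(" ".join(shown))
    return frames


# English regions used purely for illustration
for frame in moving_window_frames(["Some", "of", "the", "boys"]):
    print(frame)
```

Rebuilding each frame from the fully masked line is what makes the window non-cumulative: the previously read region disappears as soon as the next one is shown.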

When the participant finished the sentence, after a 250-750 ms blank screen they were presented with a 7-point rating scale from "consistent" (一致) to "inconsistent" (不一致); the direction of the scale (i.e., whether "consistent" appeared on the left or the right) was counterbalanced across participants. The participants' task was to judge whether or not the sentence just presented was consistent with the picture array. Because the fillers included underinformative sentences, which can be interpreted as consistent or as inconsistent with the context (Noveck & Posada, 2003; Bott & Noveck, 2004; Tavano, 2010), participants were given explicit instructions before the experiment began that what the experimenters were interested in was the participants' own linguistic intuitions and that for some trials there would be no right or wrong answer. Participants chose a point value from the scale and submitted their responses using a gamepad. The trial ended with the participant's response, and the screen remained blank for 750-1250 ms, after which time the next trial began.
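Because the direction of the rating scale was counterbalanced, raw clicks have to be put on a common scale before analysis. The ratings model in the Results (High near 6, Mismatch about 3 points lower) suggests that higher analysed values mean "more consistent", so the sketch below assumes that coding. `recode_rating` is a hypothetical helper, and numbering scale positions 1 to 7 from left to right is also an assumption.

```python
def recode_rating(position, consistent_on_left, n_points=7):
    """Convert a clicked scale position (1 = leftmost point) into a rating where
    n_points means "consistent" (一致) and 1 means "inconsistent" (不一致).

    Hypothetical helper: assumes positions are numbered left to right and that
    analysed ratings run from inconsistent (low) to consistent (high).
    """
    if not 1 <= position <= n_points:
        raise ValueError(f"position must be between 1 and {n_points}")
    # When "consistent" sits on the left, the scale is reversed relative to the analysis coding
    return n_points + 1 - position if consistent_on_left else position


# The leftmost point means "fully consistent" only for participants with "consistent" on the left
print(recode_rating(1, consistent_on_left=True))   # 7
print(recode_rating(1, consistent_on_left=False))  # 1
```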

The 24 critical stimuli were organized into four lists in a Latin square design, such that a given item appeared in a different condition in each list, no item was repeated within a list, and each list included 6 items per condition. The remaining 124 filler items were included in each list. The order of the items was pseudorandomized by the experimental control program at runtime for each participant, such that the first 16 trials were filler trials (selected such that half were consistent trials and half were inconsistent, and a variety of sentence types, picture types, and violation locations was sampled) and the remaining trials were fully randomized. Participants were given three breaks during the experiment (once every 37 trials). Participants completed a brief practice session of 2 trials (one consistent and one with an inconsistent verb) before the main experiment. The entire experiment, including practice and breaks, took about 40 minutes.
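The list construction and trial ordering can be sketched as follows. The Latin square rotates conditions so that item i appears in condition (i + list) mod 4, which yields 6 items per condition in each list and a different condition for every item across lists. The ordering function is a simplification: it puts 16 randomly chosen fillers first but does not enforce the half-consistent/half-inconsistent balance or the variety constraints described above. All names (`latin_square_lists`, `pseudorandom_order`, `break_points`) are hypothetical, not the experimental control program's.

```python
import random

CONDITIONS = ["High", "Mid", "Low", "Mismatch"]


def latin_square_lists(n_items=24, conditions=CONDITIONS):
    """One item -> condition mapping per list, rotating conditions across lists."""
    k = len(conditions)
    return [
        {item: conditions[(item + list_index) % k] for item in range(n_items)}
        for list_index in range(k)
    ]


def pseudorandom_order(critical, fillers, n_lead_fillers=16, seed=None):
    """Simplified ordering: n_lead_fillers fillers first, then everything else shuffled.

    The study also balanced the lead-in fillers (half consistent, half inconsistent,
    varied sentence and picture types); that constraint is omitted here.
    """
    rng = random.Random(seed)
    pool = list(fillers)
    rng.shuffle(pool)
    lead, rest = pool[:n_lead_fillers], pool[n_lead_fillers:] + list(critical)
    rng.shuffle(rest)
    return lead + rest


def break_points(n_trials, every=37):
    """Trial counts after which a break is offered (none after the final trial)."""
    return list(range(every, n_trials, every))


lists = latin_square_lists()
order = pseudorandom_order(
    [("critical", i, lists[0][i]) for i in range(24)],
    [("filler", j, None) for j in range(124)],
    seed=2012,
)
print(len(order), break_points(len(order)))  # 148 [37, 74, 111]
```

With 24 critical and 124 filler trials per list, a break every 37 trials gives exactly the three breaks mentioned above.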

3 Results

3.1 Consistency ratings

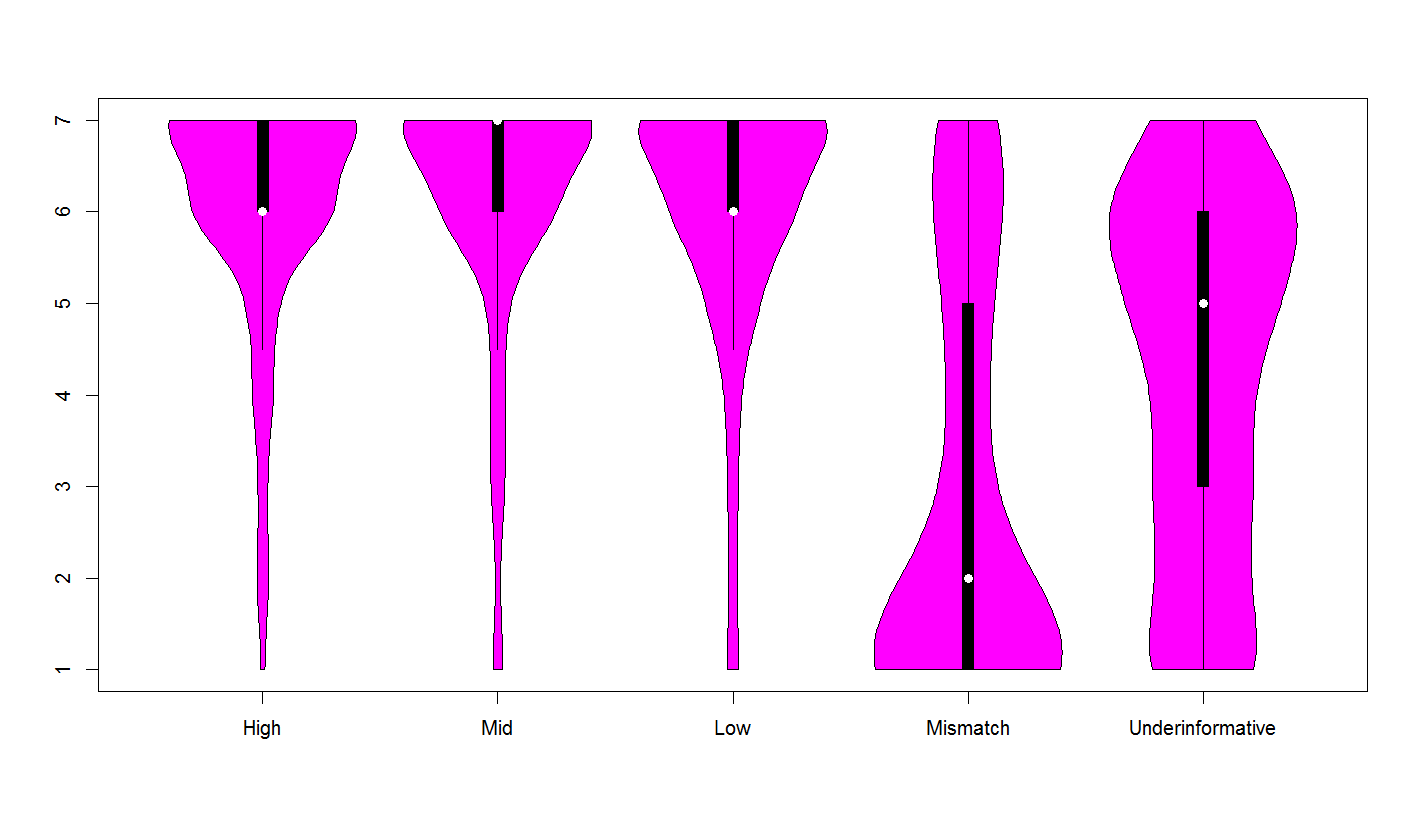

A violin plot of consistency ratings for the critical sentences is shown above. Also shown are ratings for underinformative sentences (SOME sentences paired with an ALL picture).

A few things can be noted from this plot:

- The Low condition was not substantially more acceptable than High, contrary to my expectation;

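- While the ratings for Mismatch were low enough to indicate that participants did comprehend the sentences and attend to the task, they are still probably higher than would be expected for sentences that plainly mismatch the picture (the model below puts Mismatch at roughly 3 on the 7-point scale, versus roughly 6 for High).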

A linear mixed model fit only to the critical items (not the underinformative or other fillers), with random intercepts for participants, a nuisance predictor for trial order in the experiment, and High as the baseline condition, showed a significant effect of Condition: Mismatch was rated lower than the other conditions, while Low and Mid did not differ significantly from High:

> anova(Baseline, Model)

Data: r

Models:

Baseline: response ~ trialnumber + (1|subj)

Model: response ~ condition + trialnumber + (1|subj)

Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

m1 4 4161.7 4181.2 -2076.9 4153.7

m0 7 3645.4 3679.5 -1815.7 3631.4 522.29 3 < 2.2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

> summary(Model)

Linear mixed model fit by REML ['lmerMod']

Formula: response ~ condition + trialnumber + (1|subj)

Data: r

REML criterion at convergence: 3652.868

Random effects:

Groups Name Variance Std.Dev.

subj (Intercept) 0.1142 0.3379

Residual 2.5485 1.5964

Number of obs: 956, groups: subj, 40

Fixed effects:

Estimate Std. Error t value

(Intercept) 6.1137293 0.1624359 37.64

conditionLow -0.0748086 0.1462100 -0.51

conditionMid 0.0494184 0.1462192 0.34

conditionMismatch -3.1636650 0.1462562 -21.63

trialnumber -0.0002397 0.0013895 -0.17
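As a check on the anova() table above, the likelihood-ratio statistic is the difference between the two models' deviances, its degrees of freedom are the difference in parameter counts (k = 4 for Baseline, the row labelled m1: intercept, trialnumber, participant variance, residual variance; Model, the row labelled m0, adds three Condition contrasts for k = 7), and AIC and BIC follow from the same columns with n = 956 observations. The deviances differ from the REML criterion reported by summary(), presumably because lme4's anova() refits REML models by maximum likelihood before comparing them. Using the rounded values printed above:

$$
\begin{aligned}
\chi^2 &= D_{\text{Baseline}} - D_{\text{Model}} = 4153.7 - 3631.4 = 522.3,\\
\text{df} &= k_{\text{Model}} - k_{\text{Baseline}} = 7 - 4 = 3,\\
\text{AIC} &= D + 2k:\quad 4153.7 + 2 \cdot 4 = 4161.7, \qquad 3631.4 + 2 \cdot 7 = 3645.4,\\
\text{BIC} &= D + k \ln n:\quad 4153.7 + 4 \ln 956 \approx 4181.2, \qquad 3631.4 + 7 \ln 956 \approx 3679.4.
\end{aligned}
$$

The small differences from the printed output (522.3 vs. 522.29, 3679.4 vs. 3679.5) come from the rounding of the printed deviances.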

3.2 Reading times

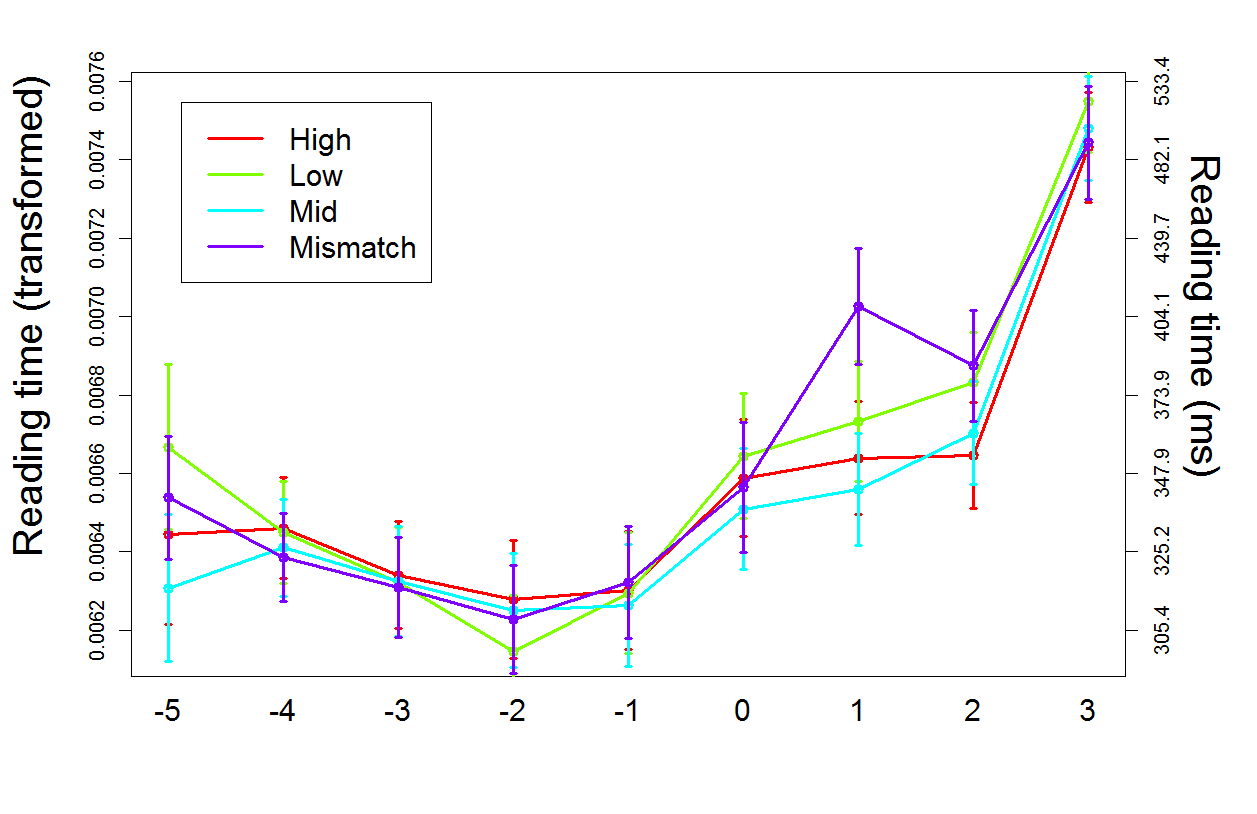

For the critical sentences, observations with reading times under 200 ms or over 1500 ms were removed, leaving 7863 observations in the analysis (filler sentences were not analyzed). Reading times were transformed to reflected reciprocals to correct for skewness. Reading times are plotted above; error bars show ±2 standard errors, and "0" on the x-axis represents the critical object DP. As in Politzer-Ahles (2012), a robust slowdown in the spillover segments was observed only in the object Mismatch condition; High-proportion trials showed no spillover slowdown relative to Low-proportion trials.
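The trimming and transformation step can be sketched as below. The reflected reciprocal is shown as -1000/RT, one common form, which keeps slower reads as larger values while reducing right skew. The constant used in the actual analysis is not stated, and the positive intercepts in the model tables suggest the author's version used a different offset or scale, so values from this sketch are not directly comparable with the printed coefficients. `trim_and_reflect` is a hypothetical name.

```python
def trim_and_reflect(rts_ms, lower=200.0, upper=1500.0, scale=1000.0):
    """Drop reading times outside [lower, upper] ms, then apply a reflected reciprocal.

    -scale / RT preserves the ordering of RTs (slower -> larger value) while
    compressing the long right tail. The scale constant here is an assumption.
    """
    kept = [rt for rt in rts_ms if lower <= rt <= upper]
    return [-scale / rt for rt in kept]


print(trim_and_reflect([150, 200, 400, 800, 1600]))  # [-5.0, -2.5, -1.25]
```

Reflecting (negating) the reciprocal matters for interpretation: without it, a positive coefficient would mean faster reading, whereas here a positive coefficient, like those for Mismatch in the spillover segments, means slower reading.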

Statistical analysis used linear mixed-effects models with crossed random intercepts for participants and items. First, a model fit to observations from the critical segment and the following three segments tested the interaction between Segment and Condition, with nuisance predictors for trial order in the experiment and for the number of characters and strokes in each segment. The interaction was significant:

> anova( No_int, Int )

Data: crit

Models:

No_int: RT ~ Order+NumChars+Strokes+ Segment+Condition + (1|Subject) + (1|Item)

Int: RT ~ OrderInExp+NumChars+Strokes + Segment/Condition + (1|Subject) + (1|Item)

Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

mb 18 -89752 -89627 44894 -89788

m 42 -89769 -89476 44926 -89853 64.639 24 1.375e-05 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1
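The p-value in this table is the upper tail of a chi-square distribution with 42 − 18 = 24 degrees of freedom evaluated at the reported statistic 64.639. For integer degrees of freedom that tail has a closed form (a finite Poisson-type sum for even df, an erfc term plus a half-integer series for odd df), which the sketch below implements with the standard library only. `chi2_sf` is a hypothetical helper, not the routine R used; in practice one would call `pchisq(64.639, 24, lower.tail = FALSE)` in R or `scipy.stats.chi2.sf` in Python.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with integer df."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{j=0}^{df/2 - 1} (x/2)^j / j!
        term, total = 1.0, 1.0
        for j in range(1, df // 2):
            term *= half / j
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) + exp(-x/2) * sum_{j=1}^{(df-1)/2} (x/2)^(j-1/2) / Gamma(j+1/2),
    # with each series term computed in log space to avoid overflow
    total = math.erfc(math.sqrt(half))
    for j in range(1, (df - 1) // 2 + 1):
        total += math.exp(-half + (j - 0.5) * math.log(half) - math.lgamma(j + 0.5))
    return total


# Statistics copied from the two anova() printouts in this writeup
p_rt = chi2_sf(64.639, 24)        # Segment-by-Condition interaction on reading times
p_ratings = chi2_sf(522.29, 3)    # Condition effect on consistency ratings
print(f"{p_rt:.4g} {p_ratings:.3g}")
```

The first value should reproduce the printed 1.375e-05 up to rounding, and the second is far below the 2.2e-16 floor that R prints as "< 2.2e-16".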

Next, I fit a separate linear model at each segment of interest (the critical segment and the following three segments), with High as the baseline condition. NumChars was not included as a nuisance predictor for the critical segment, since all critical words were two characters long. The model results are shown below (I have added asterisks to coefficients of interest where |t| > 2):

> summary(Segment0)$coefficients

Estimate Std. Error t value

(Intercept) 6.864530e-03 1.795278e-04 38.2365878

OrderInExp -4.742563e-06 6.900586e-07 -6.8726972

TotalStrokes 7.225308e-06 4.433971e-06 1.6295343

ConditionLow 5.811021e-05 7.169531e-05 0.8105162

ConditionMid -6.635501e-05 7.147635e-05 -0.9283492

ConditionMismatch 3.751311e-05 7.211453e-05 0.5201880

> summary(Segment1)$coefficients

Estimate Std. Error t value

(Intercept) 6.793528e-03 1.636925e-04 41.5017703

OrderInExp -4.690226e-06 6.754006e-07 -6.9443617

TotalStrokes 6.167231e-06 6.127667e-06 1.0064565

NumChars 8.769830e-05 5.759209e-05 1.5227489

ConditionLow 8.956936e-05 7.024141e-05 1.2751646

ConditionMid -6.903232e-05 7.048281e-05 -0.9794206

ConditionMismatch 4.192654e-04 7.034970e-05 5.9597330 *

> summary(Segment2)$coefficients

Estimate Std. Error t value

(Intercept) 7.034320e-03 1.841457e-04 38.1997450

OrderInExp -5.254555e-06 6.585891e-07 -7.9785023

TotalStrokes 5.165245e-06 7.687652e-06 0.6718884

NumChars -1.227760e-05 9.251959e-05 -0.1327027

ConditionLow 1.832221e-04 6.841159e-05 2.6782313 *

ConditionMid 6.180803e-05 6.842951e-05 0.9032366

ConditionMismatch 2.754156e-04 6.910604e-05 3.9854057 *

> summary(Segment3)$coefficients

Estimate Std. Error t value

(Intercept) 8.152980e-03 3.428724e-04 23.7784644

OrderInExp -6.716841e-06 7.268655e-07 -9.2408309

TotalStrokes 9.587043e-06 1.052159e-05 0.9111777

NumChars -9.060783e-05 1.196884e-04 -0.7570313

ConditionLow 1.207731e-04 7.402046e-05 1.6316179

ConditionMid 3.542746e-05 7.404778e-05 0.4784406

ConditionMismatch 7.466956e-05 7.633407e-05 0.9781944
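The asterisks above follow a |t| > 2 rule of thumb (roughly |z| > 1.96 for large samples), since the printed tables give no p-values. The sketch below recomputes t = Estimate / Std. Error for the Condition terms copied from the four tables and flags the same three coefficients. `COEFS` and `flag_effects` are hypothetical names, and the threshold is a heuristic rather than a formal test.

```python
# Condition coefficients (Estimate, Std. Error) copied from the four tables above;
# each contrast is against the High baseline.
COEFS = {
    0: {"Low": (5.811021e-05, 7.169531e-05),
        "Mid": (-6.635501e-05, 7.147635e-05),
        "Mismatch": (3.751311e-05, 7.211453e-05)},
    1: {"Low": (8.956936e-05, 7.024141e-05),
        "Mid": (-6.903232e-05, 7.048281e-05),
        "Mismatch": (4.192654e-04, 7.034970e-05)},
    2: {"Low": (1.832221e-04, 6.841159e-05),
        "Mid": (6.180803e-05, 6.842951e-05),
        "Mismatch": (2.754156e-04, 6.910604e-05)},
    3: {"Low": (1.207731e-04, 7.402046e-05),
        "Mid": (3.542746e-05, 7.404778e-05),
        "Mismatch": (7.466956e-05, 7.633407e-05)},
}


def flag_effects(coefs, threshold=2.0):
    """Return (segment, condition, t) for terms with |Estimate / SE| > threshold."""
    flagged = []
    for segment, terms in sorted(coefs.items()):
        for condition, (estimate, se) in terms.items():
            t = estimate / se
            if abs(t) > threshold:
                flagged.append((segment, condition, round(t, 2)))
    return flagged


for segment, condition, t in flag_effects(COEFS):
    print(f"Segment {segment}: {condition} vs. High, t = {t}")
```

Because the reading times were reflected reciprocals, the positive t values flagged here correspond to slower reading than in the High condition.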

In short: in the first and second segments after the critical word, the Mismatch condition was read more slowly than the other conditions. In the second spillover segment, the Low condition was also read somewhat more slowly than High (the baseline) and, numerically, than Mid, contrary to my predictions.

4 Discussion

As in Politzer-Ahles (2012), no behavioral evidence was found for a SOME +> NOT MOST implicature: participants processed more-than-half sets referred to by SOME as easily as less-than-half sets referred to by SOME. Rather than concluding that this implicature does not arise in the same way as SOME +> NOT ALL, however, I believe the null result is more likely an artifact of the experimental design. Firstly, there is other experimental evidence that this implicature does influence rating data, at least offline (Degen & Tanenhaus, 2014; Zhan, Jiang, Politzer-Ahles, & Zhou, in preparation), and neural activity (Zhan, Jiang, Politzer-Ahles, & Zhou, in preparation). Secondly, in the present experiments the subsets (2, 3, or 4 of something, e.g., 2 people eating hot dogs and 4 eating cheese) all fell within the subitizing range, and such small sets probably do not behave the same way, with respect to how SOME is interpreted, as larger sets or mass nouns do (Degen & Tanenhaus, 2014; Breheny, Ferguson, & Katsos, 2012). Thirdly, it is still possible that reading times in the present paradigm are simply not sensitive to these meaning differences, and/or that potential differences between the quantifier conditions are masked by a floor effect. Visual world eye-tracking with stimuli outside the subitizing range (e.g., 80 blue dots and 20 red dots, or a screen that is 80% blue and 20% red) might be more appropriate.

Finally, even if it were found that the SOME +> NOT MOST implicature did not have the same psychological status as SOME +> NOT ALL, this would not necessarily challenge a Gricean-based model of online realization of scalar inferences. This is because, while MOST is a potential alternative to SOME, it might not be a relevant alternative. Geurts (2010) provides a relevant example of two Horn scales:

- <animal, dog>

- <animal, dog, beagle>

For an utterance like "I saw an animal on the lawn this morning", the first scale (which indicates that dog is a stronger expression than animal) allows us to derive the implicature that the speaker does not know whether the animal was a dog. But for an utterance like "I saw a dog on the lawn this morning", the hearer is not very likely to infer that the speaker doesn't know whether the dog was a beagle (assuming many contextual factors, such as that we are in a culture where it can reasonably be assumed that everyone knows what a beagle looks like, and that we are not at a dog show or listening to a conversation between dog enthusiasts). In other words, even though beagle is a stronger expression than dog in the scale <animal, dog, beagle>, it is not a salient or relevant alternative (in the context assumed in this example) because it is not at the level of specificity expected in the discourse. We could imagine a similar situation for <SOME, MOST>: even if MOST is a stronger expression, the hearer might not consider it a relevant alternative, and thus will not draw the inference that rules it out.