Listen to the following two sound files. What do you notice about them?

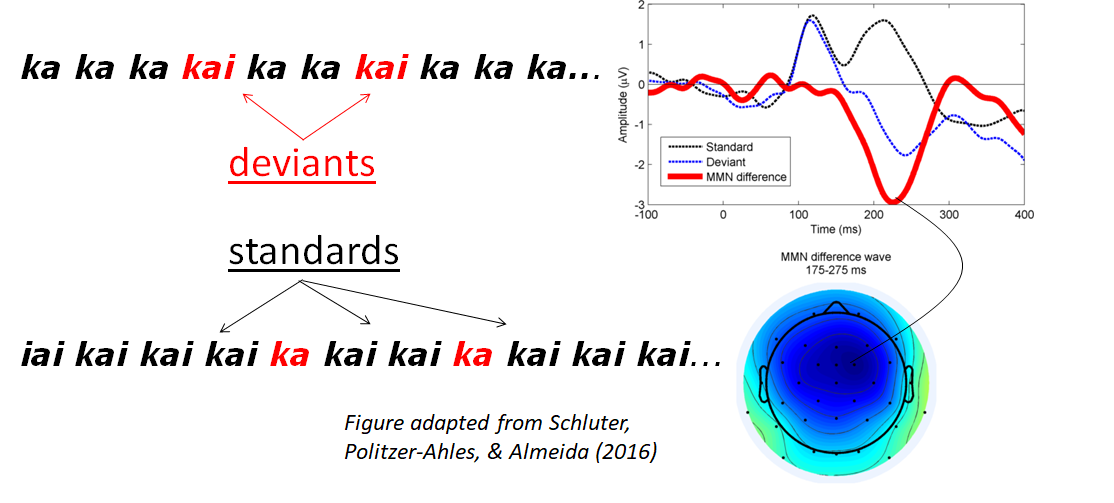

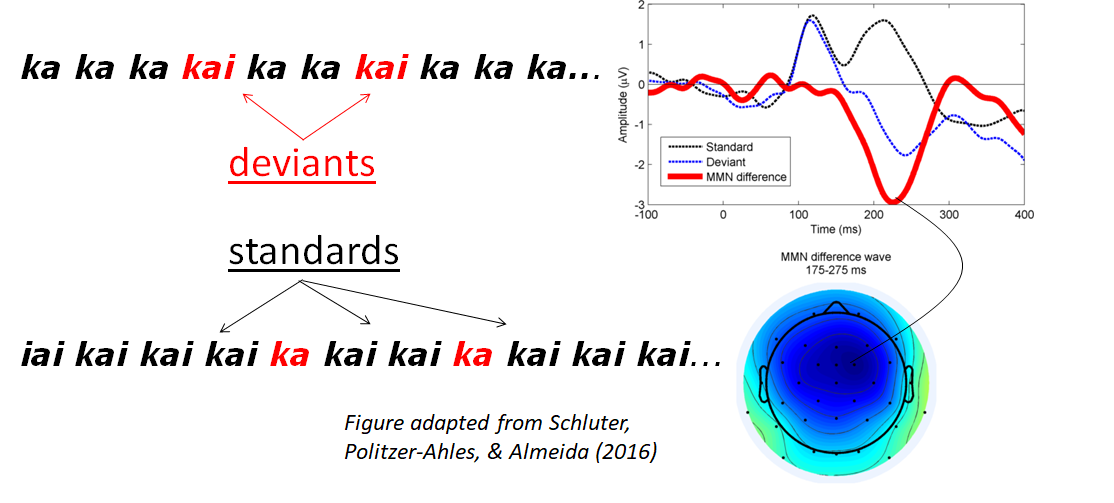

This is something called an oddball paradigm. In each recording, there is one sound that's played many times, and one sound that's played rarely. The sound played a lot is called the standard, and the sound played rarely is called the deviant.
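To make the structure concrete, here is a minimal Python sketch of how an oddball sequence could be built. The function name `oddball_sequence`, the 15% deviant rate, and the 100-trial length are illustrative assumptions. They are not the settings used to make the recordings above. "ka" as the standard and "kai" as the deviant are likewise chosen only for illustration.

```python
import random
from collections import Counter


def oddball_sequence(standard, deviant, n_trials=100, p_deviant=0.15, seed=None):
    """Return a shuffled list of trials: mostly `standard`, occasionally `deviant`.

    The number of deviants is fixed (n_trials * p_deviant, rounded), so every
    sequence has exactly the same standard/deviant ratio; only the order is random.
    """
    if not 0 < p_deviant < 0.5:
        raise ValueError("the deviant should be the rarer sound (0 < p_deviant < 0.5)")
    n_deviants = round(n_trials * p_deviant)
    trials = [deviant] * n_deviants + [standard] * (n_trials - n_deviants)
    random.Random(seed).shuffle(trials)  # seeded RNG so a sequence can be reproduced
    return trials


if __name__ == "__main__":
    seq = oddball_sequence("ka", "kai", seed=1)
    print(" ".join(seq[:20]))
    print(Counter(seq))  # 85 standards ("ka"), 15 deviants ("kai")
```

Real experiments often add extra constraints on the order, such as keeping deviants from occurring back to back. That step is left out here to keep the sketch short.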

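In this kind of situation, people are often good at noticing the difference between the sounds, even if they're not paying attention. We know this because of an ERP component called the mismatch negativity (MMN for short). The mismatch negativity is calculated by taking the ERP elicited by a sound when it is the deviant and subtracting the ERP elicited by that same sound when it is the standard (for example, in a recording where the roles of the two sounds are swapped).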

This concept is illustrated in the figure below with real ERP data.

As you can see from the figure above, the brainwave elicited by hearing kai as a deviant (the blue dotted line) is much more negative than the brainwave elicited by hearing kai as a standard (the black dotted line) about 200 milliseconds after hearing the sound. Thus, the {deviant minus standard} difference (the thick red line) is very negative around 200 milliseconds. This is the mismatch negativity. This ERP component appears when people listen to sounds in an oddball arrangement, even if they aren't paying attention (e.g., if they are watching a movie at the same time); it shows that the person's brain has somehow automatically detected the difference between ka and kai.
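The subtraction described above can be sketched in a few lines of Python. The amplitudes below are made-up toy numbers in microvolts, with one sample every 50 ms. They are not the data in the figure. The 100 to 250 ms search window is an assumption chosen to bracket the roughly 200 ms latency mentioned above.

```python
def difference_wave(deviant_erp, standard_erp):
    """Deviant minus standard, sample by sample.

    Both ERPs should come from the same sound (e.g. "kai" as deviant and
    "kai" as standard), so that acoustic differences between sounds cancel out.
    """
    if len(deviant_erp) != len(standard_erp):
        raise ValueError("both ERPs need the same number of samples")
    return [dev - std for dev, std in zip(deviant_erp, standard_erp)]


def mmn_peak(diff, times_ms, window_ms=(100, 250)):
    """Latency and amplitude of the most negative point inside the window."""
    in_window = [(amp, t) for amp, t in zip(diff, times_ms)
                 if window_ms[0] <= t <= window_ms[1]]
    if not in_window:
        raise ValueError("no samples fall inside the window")
    amp, t = min(in_window)  # the most negative amplitude wins
    return t, amp


times_ms = [0, 50, 100, 150, 200, 250, 300]
kai_as_deviant = [0.0, 0.5, -1.0, -3.0, -5.0, -2.0, 0.0]   # toy values, microvolts
kai_as_standard = [0.0, 0.5, 0.0, -0.5, -1.0, -0.5, 0.0]   # toy values, microvolts

mmn = difference_wave(kai_as_deviant, kai_as_standard)
print(mmn)                      # [0.0, 0.0, -1.0, -2.5, -4.0, -1.5, 0.0]
print(mmn_peak(mmn, times_ms))  # (200, -4.0)
```

Many studies report the mean amplitude over a time window rather than a single peak point. Either measure can be computed from the same difference wave.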

There are a few things that can make the MMN bigger or smaller:

One of the cool things about the MMN is that it can abstract away from surface variation and detect patterns of difference. For example, Phillips and colleagues (2000) had people listen to sequences of sounds like this:

ba da da ga da ba ga ta da ga ba ba ga ba da da pa ga ka ba da ga ba ga da ga ga ta...

At first glance, this doesn't look like an oddball paradigm: there's no obvious "standard" (one sound that's presented a lot). Look more closely, though. Can you figure out what the standard and deviant are? Think about it for a bit before you scroll down to see the answer...

.

..

...

....

...

..

.

..

...

....

...

..

.

You might have noticed that most of the sounds are voiced (or at least unaspirated) sounds, like "ba", "da", and "ga". Only a few are voiceless aspirated sounds (like "pa", "ta", and "ka"). So, even though there's not a single sound that's presented a lot, there's a sound category (unaspirated sounds) that is presented a lot, and works as a standard; and there's another sound category (aspirated sounds) that's presented rarely, and works as a deviant. In this experiment, they indeed found that an MMN was elicited; that's great evidence that your brain can ignore the variations across "ba", "da", and "ga", and just pick up on the generalization that they're all unaspirated, and then it can get surprised when it suddenly hears an aspirated sound. Wang and colleagues (2012) and me et al. (2016) did something very similar to this but with Mandarin tones.
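One way to see why this works is to count the sequence above twice: once by individual syllable and once by category. This sketch uses only the portion of the sequence that is shown, with the trailing "..." dropped. The set `ASPIRATED` simply encodes the pa/ta/ka grouping described in the paragraph above, and the function name `category` is illustrative.

```python
from collections import Counter

# The excerpt shown above (the "..." continuation is not included).
SEQUENCE = ("ba da da ga da ba ga ta da ga ba ba ga ba da da pa ga ka "
            "ba da ga ba ga da ga ga ta").split()

# Voiceless aspirated syllables; everything else in the excerpt is unaspirated.
ASPIRATED = {"pa", "ta", "ka"}


def category(syllable):
    """Collapse a syllable onto the abstract category that acts as standard/deviant."""
    return "aspirated" if syllable in ASPIRATED else "unaspirated"


by_syllable = Counter(SEQUENCE)
by_category = Counter(category(s) for s in SEQUENCE)

n = len(SEQUENCE)
top_syllable, top_count = by_syllable.most_common(1)[0]
print(f"{n} syllables in the excerpt")
print(f"most frequent single syllable: {top_syllable} ({top_count / n:.0%})")
for cat, count in by_category.most_common():
    print(f"{cat}: {count} ({count / n:.0%})")
# "ga" covers only 9 of 28 trials, but the unaspirated category covers 24 of 28.
```

No single syllable reaches even a third of the trials, yet one category covers about 86% of them. That is the kind of category-level standard the MMN result suggests the brain is picking up on.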

People can go pretty nuts with this; for a really extreme example of MMN abstracting across lots of variation in the stimuli, see Pakarinen et al. (2010).

But I haven't yet told you the coolest thing of all. The MMN is also sensitive to your linguistic experience; people with different language backgrounds can have different MMNs. One famous demonstration of this comes from Phillips and colleagues (2000); it's very cool, but explaining it requires a lot of phonetics background, so let's skip it for now (if you're interested, though, you can read that paper; it's great). The dopest demonstration of this ever is by Kazanina and colleagues (2006). They had people listen to oddball sequences with [t] standards and [d] deviants. But their experiment had an interesting twist: they did it with both Russian participants and Korean participants. Why would they do that?

While Russian and Korean both have the sounds [t] and [d], the phonological status of these sounds is different in the two languages. In Russian, they belong to different phonemes: changing a [t] to a [d] can make a different word (for example, in Russian, [tom] means "volume" and [dom] means "house"). In Korean, simplifying a bit, these sounds are (to use terms from phonology) allophones of the same phoneme. In other words, changing a [t] to a [d] won't create a different word; [t] and [d] are just different ways of pronouncing the same thing in different positions in a word. At the beginning of a word this sound gets pronounced [t], but in the middle of a word (between two vowels) it gets pronounced [d]. For example, [tarimi] in Korean means "iron", but there is no word [darimi]; likewise, [pada] means "sea", but there is no word [pata]. Thus, according to phonological theory, in a Russian speaker's mind [t] and [d] are "different sounds", whereas in a Korean speaker's mind they are "two different versions of the same sound".

In the experiment, Kazanina and colleagues found that Russian speakers hearing "ta ta ta ta ta da ta ta ta da..." got a big MMN, whereas Korean speakers hearing the exact same thing did not. (They also did a control experiment with different, non-linguistic sounds, in which both Russian and Korean speakers got big MMNs; this rules out the possibility that the Korean speakers just had hearing problems or something.) What this shows is that the MMN is sensitive to the language-specific way that you automatically organize sounds into categories as you hear them: a Russian speaker hears "ta" and puts it in one category, then hears "da" and puts it in a different category, and thus gets an MMN, whereas a Korean speaker treats both as different versions of the same thing, and thus doesn't get much MMN.
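The reasoning behind this experiment can be written as a toy model. Each language maps the sounds [t] and [d] onto phoneme categories, and a sizeable MMN is expected only when the standard and deviant land in different categories. This is a deliberately simplified sketch, not Kazanina and colleagues' analysis. The dictionary `PHONEMES`, the helper names, and the choice to label the shared Korean category /t/ are illustrative assumptions. The Korean rule handles only the [t]/[d] alternation described above.

```python
# Toy phone-to-phoneme mappings, simplified in the same way as the text above.
PHONEMES = {
    "Russian": {"t": "/t/", "d": "/d/"},  # two separate phonemes (tom vs dom)
    "Korean": {"t": "/t/", "d": "/t/"},   # two allophones of one phoneme (label /t/ assumed)
}

VOWELS = set("aeiou")


def korean_surface(underlying):
    """Pronounce the Korean /t/ phoneme as [d] between two vowels, [t] elsewhere.

    Only this one alternation is modeled; every other segment is copied unchanged.
    """
    out = []
    for i, seg in enumerate(underlying):
        between_vowels = (0 < i < len(underlying) - 1
                          and underlying[i - 1] in VOWELS
                          and underlying[i + 1] in VOWELS)
        out.append("d" if seg == "t" and between_vowels else seg)
    return "".join(out)


def same_category(language, phone_a, phone_b):
    """Do two phones count as 'the same sound' for a listener of this language?"""
    mapping = PHONEMES[language]
    return mapping[phone_a] == mapping[phone_b]


def expect_mmn(language, standard, deviant):
    """Toy prediction: a sizeable MMN only if standard and deviant are different categories."""
    return not same_category(language, standard, deviant)


print(korean_surface("tarimi"), korean_surface("pata"))  # tarimi pada
for language in ("Russian", "Korean"):
    print(language, "MMN expected:", expect_mmn(language, "t", "d"))
# Russian MMN expected: True
# Korean MMN expected: False
```

The prediction depends only on the listener's categories, not on the acoustics. The same "ta ... da" input yields different predictions for the two languages, mirroring the pattern Kazanina and colleagues reported.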

Overall, the MMN is useful because it's a simple and well-understood brain response which can be used to show whether or not people can detect differences, including complex linguistic differences. Thus, it has all the features we want in an ERP component: it's easy to elicit, and we already have a good understanding of what makes it appear and disappear (and what makes it get bigger and smaller), but still it can be used to test quite abstract and complicated linguistic questions.

To finish this task, answer either one of the following questions.

Remember that, to complete this module, you need to do three of the extra activities, and they should cover at least two different neurolinguistic methods (i.e., you can't pass the module by just doing three tasks about EEG). If you haven't finished three yet, you can choose another from the list below:

When you have finished three extra activities, you are done with the module (assuming all your work on this and the previous tasks has been satisfactory). If you are interested in leading a discussion on this module, you can go on to see the suggested discussion topics. Otherwise, you can return to the module homepage to review this module, or return to the class homepage to select a different module or assignment to do now.

by Stephen Politzer-Ahles. Last modified on 2021-07-15. CC-BY-4.0.